The original question was: Recently I’ve come across a task to calculate the probability that a run of at least K successes occurs in a series of N (K≤N) Bernoulli trials (weighted coin flips), i.e. “what’s the probability that in 50 coin tosses one has a streak of 20 heads?”. This turned out to be a very difficult question and the best answer I found was a couple of approximations.

So my question to a mathematician is: “Why is this task so difficult compared e.g. to finding the probability of getting 20 heads in 50 coin tosses solved easily using binomial formula? How is this task in fact solved – is there an exact analytic solution? What are the main (preferably simplest) approximations and why are they used instead of an analytic solution?”

Physicist: What follows was not obvious. It was the result of several false starts. It’s impressive in the same sense that a dude with seriously bruised legs, who can find anything in his house with the lights off, is also impressive. If you’re looking for the discussion of the gross philosophy, and not the detailed math, skip down to where you find the word “Jabberwocky” in bold. If you want specific answers for fixed, small numbers of coins, or you want sample computer code for calculating the answer, go to the bottom of the article.

The short answer: the probability, S, of getting K or more heads in a row in N independent attempts (where p is the probability of heads and q=1-p is the probability of tails) is:

Note that here is the choose function (also called the binomial coefficients) and we are applying a non-standard convention that

for

which makes the seemingly infinite sums always have only a finite number of terms. In fact, for N and K fixed, the answer is a polynomial with respect to the variable p.

Originally this was a pure math question that didn’t seem interesting to a larger audience, but we both worked for long enough on it that it seems a shame to let it go to waste. Plus, it gives me a chance to show how sloppy (physics type) mathematics is better than exact (math type) mathematics.

Define {Xi}j as the list of results of the first j trials. e.g., if j=4, then {Xi}j might be “{Xi}4=HTHH” or “{Xi}4=TTTH” or something like that, where H=heads and T=tails. In the second case, X1=T, X2=T, X3=T, and X4=H.

Define “Ej” as the event that there is a run of K successes (heads) in the first j trials. The question boils down to finding P(EN).

Define “Aj” as the event that the last K terms of {Xi}j are T followed by K-1 H’s ({Xi}j = X1X2X3X4…THHH…HHH). That is to say, if the next coin (Xj+1) is heads, then you’ve got a run of K.

Finally, define p = P(H) and q = P(T) = 1-p. Keep in mind that a “bar” over an event means “not”. So “” reads “not heads equals tails”

The probability of an event is the sum of the probabilities of the different (disjoint) ways that event can happen. So:

iv) comes from the fact that . If you have a run of K heads in the first j trials, of course you’ll have a run in the first j+1 trials. v) The zero comes from the fact that if the first j terms don’t have a run of K heads and the last K-1 terms are not all heads, then it doesn’t matter what the j+1 coin is, you can’t have a run of K heads (you can’t have the event Ej+1 and not Ej and not Aj). vii) is because if there is no run of K heads in the first j trials, but the last K-1 of those j trials are all heads, then the chance that there will be a run of K in the first j+1 trials is just the chance that the next trial comes up heads, which is p. ix) the chance of the last K trials being a tails followed by K-1 heads is qpK-1. x) If the last K (of j) trials are a tails followed by K-1 heads, then whether a run of K heads does or doesn’t happen is determined in the first j-K trials.

The other steps are all probability identities (specifically and Bayes’ theorem:

).

Rewriting this with some N’s instead of j’s, we’ve got a kick-ass recursion:

And just to clean up the notation, define S(N,K) as the probability of getting a string of K heads in N trials (up until now this was P(EN)).

S(N,K)=S(N-1,K)+qpK-qpKS(N-K-1,K). We can quickly figure out two special cases: S(K,K) = pK, and S(l,K) = 0 when l<K, and that there’s no way of getting K heads without flipping at least K coins. Now check it:

ii) Plug the equation for S(N,K) in for S(N-1,K). iii-vi) is the same thing. vii) write the pattern as a sum. viii) the terms up to K-1 are all zero, so drop them.

Holy crap! A newer, even better recursion! It seems best to plug it back into itself!

You can keep plugging the “newer recursion” back in again and again (it’s a recursion after all). Using the fact that and

you can carry out the process a couple more times, and you’ll find that:

There’s your answer.

In the approximate (useful) case:

Assume that N is pretty big compared to K. A string of heads (that can be zero heads long) starts with a tails, and there should be about Nq of those. The probability of a particular string of heads being at least K long is pk so you can expect that there should be around E=Nqpk strings of heads at least K long. When E≥1, that means that it’s pretty likely that there’s at least one run of K heads. When E<1, E=Nqpk is approximately equal to the chance of a run of at least K showing up.

Jabberwocky: And that’s why exact solutions are stupid.

Mathematician: Want an exact answer without all the hard work and really nasty formulas? Computers were invented for a reason, people.

We want to compute S(N,K), the probability of getting K or more heads in a row out of N independent coin flips (when there is a probability p of each head occurring and a probability of 1-p of each tail occurring). Let’s consider different ways that we could get K heads in a row. One way to do it would be to have our first K coin flips all be heads, and this occurs with a probability . If this does not happen, then at least one tail must occur within the first K coin flips. Let’s suppose that j is the position of the first tail, and by assumption it satisfies

. Then, the probability of having K or more heads in a row in the entire set of coins (given that the first tail occurred at

) is simply the probability of having K or more heads in a row in the set of coins following the jth coin (since there can’t be a streak of K or more heads starting before the jth coin due to j being smaller or equal to K). But this probability of having a streak of K or more after the jth coin is just S(N-j,K). Now, since the probability that our first tail occurs at position j is the chance that we get j-1 heads followed by one tail, so it is

. That means that the chance that the first tail occurs on coin j AND there is a streak of K or more heads is given by

. Hence, the probability that the first K coins are all heads, OR coin one is the first tails and the remainder have K or more heads in a row, OR coin two is the first tails and the remainder have K or more heads in a row, OR coin three is the first tails and…, is given by:

Note that what this allows us to do is to compute S(N,K) by knowing the values of S(N-j,K) for . Hence, this is a recursive formula for S(N,K) which relates harder solutions (with larger N values) to easier solutions (with smaller N values). These easier solutions can then be computed using even easier solutions, until we get to S(A,B) for values of A and B so small that we already know the answer (i.e. S(A,B) is very easy to calculate by hand). These are known as our base cases. In particular, we observe that if we have zero coins then there is a zero probability of getting any positive number of heads is zero, so S(0,K) = 0, and the chance of getting more heads than we have coins is zero, so S(N,K) = 0 for K>N.

All of this can be implemented in a few lines of (python) computer code as follows:

An important aspect of this code is that every time a value of S(N,K) is computed, it is saved so that if we need to compute S(N,K) again later it won’t take much work. This is essential for efficiency since each S(N,K) is computed using S(N-j,K) for each j with and therefore if we don’t save our prior results there will be a great many redundant calculations.

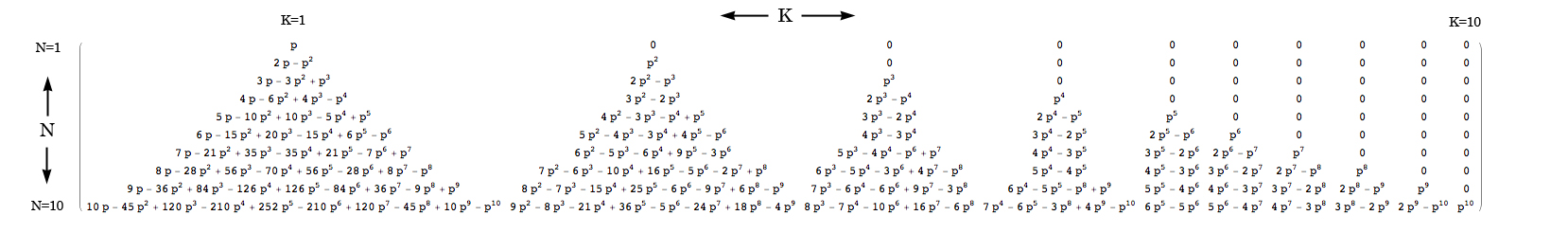

For your convenience, here is a table of solutions for S(N,K) for and

(click to see it enlarged):

![Probability S[N,K] of a streak of K or more out of N independent coin flips.](https://www.askamathematician.com/wp-content/uploads/2010/07/ProbOfStreak.jpg)

Guys, I’m the author of the original question. I already lost hope for an answer. 🙂

Sorry for asking such a difficult question that made you spend so much time on solving it. But your answer is really useful. Thanks a bunch!

Why wouldn’t the following work?

The probability of a run of 15 is 0.5^15

There are n-k+1 ways for a run of length k to occur in a series of length n (think about it)

Therefore 26(0.5^-15)=.000793457

That is for a n=40 and k=15 (see previous)

Your approach does not work because probability(A or B) = probability(A) + probability(B) only when A and B are mutually exclusive (i.e. they can’t both happen at the same time). The problem here is that having a run of 15 in a row starting at position 1 does not rule out the possibility of a run of 15 in a row starting at position 2, and so forth.

Thanks.

Your approach is a good low-probability approximation. If you had p=0.01 instead of p=0.5, for example, then then chance of runs greater than k, and especially the chance of two independent runs of k, would be very small.

Hi,

I am trying to work this out in Basic programming language and therefore writing the sequence down for example S(3,2) which I know gives P = 0.3750, but I don’t succeed.

Now what I do is:

S(3,2) = p^2 + sum_{1,2} p^(j-1)*(1-p)*S(2,2)

with p = 0.5 and S(2,2) = 0.5^2

I get:

S(3,2) = 0.5^2 + sum_{1,2} 0.5^(j-1)*0.5*0.5^2

the sum works out for j = 1: (0.5^0)*0.5*0.5^2 = 0.5^3 and

for j = 2: (0.5^1)*0.5*0.5^2 = 0.5^4

totalling 0.5^2 + (0.5^3 + 0.5^4) = 0.4375??

what am I doing wrong?

thanks

Marc

In the second term of the sum you should have S(1,2), which is zero. Instead you have S(2,2).

Hi Physicist,

You are absolutely right! Thank you.

Marc

wow Physicist and guys, you are amazing.

So is there a simple way to work these out? Yes I mean a CALCULATOR 🙂

Also one more question that is killing me,

If we take a coin flip with p=0.5, so based on the above we know the probabliity of k success in a row over n trials. thats fine.

But what happens if there is another person tossing a different coin, and they toss it in turns, one toss person A and one toss person B.

Now- what is the probablity that both together will have k success in a raw, for example if k=10 than each one should have 5 success in a raw (or more) at the same time.

Now to be precise instead of coin tosses take two independent different events, each one with its own probability (can be same or different), the point is that each event has a different set of rules and hence a different sequences of win/loss.

Thnaks.

Although different approximations apply for different values of p, K, and N, in general the first term from the sums should be fine (p^K+(N-K)qp^K). If not, since they’re alternating sums, you can add up terms until they’re smaller than the error you’re looking for.

In the first case, if the two coins have the same probability, then it doesn’t matter who’s flipping what. If you imagine someone writing down a list of flips, they don’t care where the flips are coming from. The equations in the post still apply to that list.

When you start talking about different events with different probabilities, then things get uncomfortably tricky. The equations in the post are already pretty nasty. The equations for this solution should be about twice as nasty. It takes less time (usually) to write a computer program to run through a couple million samples than to derive an exact solution. And really, who cares about the difference between 62.1% and 62.13%?

would you be able to post the complete python code?

I would like to run it for numCoins = 31.556.926, minHeads=21, headProb=.5

thank you!

Pingback: Q: Is there a formula to find the Nth term in the Fibonacci sequence? | Ask a Mathematician / Ask a Physicist

the source code can be found at http://pastebin.com/cPWHQXUF and below (hopefully):

#!/usr/bin/env python

import sys

def probOfStreak(numCoins, minHeads, headProb, saved=None):

if saved == None: saved = {}

ID = (numCoins, minHeads, headProb)

if ID in saved:

return saved[ID]

else:

if minHeads > numCoins or numCoins <= 0:

result = 0

else:

result = headProb ** minHeads

for firstTail in xrange(1, minHeads+1):

pr = probOfStreak(numCoins-firstTail, minHeads, headProb, saved)

result += (headProb ** (firstTail-1)) * (1 - headProb) * pr

saved[ID] = result

return result

if __name__ == "__main__":

sys.setrecursionlimit(2000)

print probOfStreak(int(sys.argv[1]), int(sys.argv[2]), float(sys.argv[3]))

Mathematician says that “If this does not happen, then at least one tail must occur within the first K coin flips.” Your recursive relationship is built on the assumption that there is a single failure within the string of k successes. How do your take into account the possibilities where multiple failures occur?

Guys I found this post because I’m reading “The Drunkard’s Walk” now and cannot understand one story from it.

Here it goes:

Imagine that George Lucas makes a new Star Wars film and in one test market decides to perform a crazy experiment. He releases the identical film under two titles: “Star Wars: Episode A” and “Star Wars: Episode B”. Each film has its own marketing campaign and distribution schedule, with the corresponding details identical except that the trailers and ads for one film say “Episode A” and those for the other, “Episode B”.

Now we make a contest out of it. Which film will be more popular? Say we look at the first 20,000 moviegoers and record the film they choose to see (ignoring those die-hard fans who will go to both and then insist there were subtle but meaningful differences between the two). Since the films and their marketing campaigns are identical, we can mathematically model the game this way: Imagine lining up all the viewers in a row and flipping a coin for each viewer in turn. If the coin lands heads up, he or she sees Episode A; if the coin lands tails up, it’s Episode B. Because the coin has an equal chance of coming up either way, you might think that in this experimental box office war each film should be in the lead about half the time.

But the mathematics of randomness says otherwise: the most probable number of changes in the lead is 0, and it is 88 times more probable that one of the two films will lead through all 20,000 customers than it is that, say, the lead continuously seesaws”

I must say I fail to see the reason why the leader won’t seesaw! Can you explain please?

The proof of that statement is a bit subtle, but (this is a simplification) it’s a little like saying that it’s unlikely to flip heads, tails, heads, tails, … forever.

This is actually an experiment you can try yourself by flipping coins and keeping track of the score. You’ll find that overall it will tend to wander away from 0. The exact walk is closely modeled by the “Wiener process” (did not make up that name).

Ok, so given that we all now know how to calculate the probability of a run of K in N trials. Can we use that knowledge to calculate the expected number of times we will see a run of K for N trial? e.g. there is an 96% of getting a run of six (heads or tails) or more in 200 trials. So what is the expected number of times we will se a run of 6 or more in 200 trials?

In case anyone is interested, I’ve written JavaScript code to implement the algorithm on this site. It’s at

http://maxgriffin.net/CalcStreaks.shtml

I’d be glad to share it; just drop me an email.

Max you are a wonderful human being.

Pingback: Q: How accurately do we need to know π? Is there a reason to know it out to billions of digits? | Ask a Mathematician / Ask a Physicist

In case anyone else is interested, I translated Max’s Javascript code into a MATLAB function which is posted on the Mathworks file exchange here:

http://www.mathworks.com/matlabcentral/fileexchange/39713

It and it returns the same results as “the mathematician’s” Python function and Max’s Javascript function.

I hope others will find it useful, as I found the code here and at Max’s site.

Thanks for working out the solution!

Pingback: We keep breaking records ? so what ?… Get statistical perspective…. | Freakonometrics

Pingback: 27-Game Streak? For Heat, 50-1 Shot - NYTimes.com

Thank you, nice work!

From your recurrence

S(N,K)=S(N-1,K)+q p^k – q p^k S(N-K-1,K)

and the fact that S(N,K)=0 when N<K you get an upper bound

S(N,K) < (N-K+1) q p^k

(because q p^k S(N-K-1,K) is never negative, and N-K recursions bring you to N<K.

Can this be reversed? For example if a baseball team plays 162 games per year and has a 10 game winning streak, what is the most likely winning percentage for that team?

Pingback: The curse of poor maths skills (oh, alright… Kennett’s curse) | thenewstatsman

Pingback: The curse of poor maths skills (oh, alright… Kennett’s curse) | The New Statsman

Thanks for the great answer. This saved me a lot of time.

I’m using this result in a project I’m working on, and will be published. I therefore want to akcnolwedge the author of this solution.

I would be thankful if you could let me know who to akcnolwedge

Pingback: Why Litecoin is more secure than Bitcoin

I implemented the explicit (non-recursive) form in Mathematica in response to a question on Math Stackexchange, in case anyone is interested:

Pingback: Kevin’s NBA anti-curse (Kennett’s curse revisited) | The New Statsman

If you are looking for a streak of 6 in a population and you have a streak of 7, that would be 2 streaks of 6.

I worked the problem out once in a way that is similar to what Mathematician came up with, but I arrived at a slightly different recurrence relation, and I’m wondering if it’s simply a matter of some fancy algebra to make them equivalent.

My logic was that the chance of a streak of K or greater in a sequence of length N is the sum of the chances of a streak first occurring starting at position i, over all values of i where a streak can start ( i <= N-K+1 ). The first term for i=1 is of course p^K. For each subsequent valid value of i, the chance of a streak starting at toss i is (1-p)p^k since a flip of tails must occur at position (i-1) with a chance of (1-p) in addition to the streak of K heads in a row represented by p^K. For this streak to be the first streak in the trials, there cannot be a previous streak of K occurring anywhere in the first i-2 tosses leading up to the tails toss just prior to the start of the streak at i, for which the chance is (1-S(K,i-2)). So the final recurrence relation I get is this:

S(N,K) = p^K + SUM[i=2,N-K+1](1-p)p^K(1-S(i-2,K))

In LaTeX notation:

$$S(N,K) = p^{K} + \sum_{i=2}^{N-K+1}(1-p)p^{K}(1-S(i-2,K))$$

Thanks guys. Your solution solves a real-world problem I have. Just to connect everyone with reality. My product is different, but imagine this here: I want to sell chains and I have a manufacturer of chains supplying them. The chains I sell have to be 100 parts long without quality issues but the machine producing them is making mistakes at a certain low probability. Question: How likely is it that in a 200-part chain I buy from my supplier there is the needed high-quality 100-part chain that I want to trade (the remainder I’ll dump)? I’ll need Excel code actually

Here’s the VBA code for all those wanting to process it in e.g. Excel

Public Function pStreak(nTries, nHits, p)

Dim c As Collection

Set c = New Collection

pStreak = pS(nTries, nHits, p, c)

Set c = Nothing

End Function

Private Function pS(nTries, nHits, p, c As Collection)

On Error GoTo err

pS = c(CStr(nTries)) 'if nonexisting the exception jumps to err label

Exit Function

err:

If nHits > nTries Or nTries <= 0 Then

result = 0

Else

result = p ^ nHits

For j = 1 To nHits

px = pS(nTries - j, nHits, p, c)

result = result + ((p ^ (j - 1)) * (1 - p) * px)

Next

End If

c.Add result, CStr(nTries) 'we take the string of nTries so the collection index is hashed

pS = result

End Function

How about an R script please. Thanks.

I have been thinking and I’m still thinking. There is a saying that “a problem that is shared between two people is half settled. Now this is my challenge.

For a two way event like in playing for both Total Goals Even and Odd in soccer

following a progression,

1. Is it possible to break a losing streak mathematically so as to start the progression from the beginning again?

2. Is it possible to share part of the losses on a losing streak to see it precipitate as profit on the winning streak without going outside the market and without having a negative balance, assuming the losing streaks were to continue for a two way event running at the same time?

Your response anytime will be much appreciated.

Thanks

Thank you for this!

I ran into problems with stack overflows when N is large. This algorithm is rather easy to convert to an iterative one – S(N, K) only depends on smaller values of N. Here’s an implementation in C++: http://pastebin.com/kqkqA6v9

Is it possible to convert this formula into Excel functions in spreadsheet cells?

This page seems to rank high on google searches for this problem, and doesn’t seem to give a clear and concise answer.

Here’s one that’s much better imo

https://www.reddit.com/r/math/comments/4kj27s/probability_of_getting_n_heads_in_a_row_after_m/d3ftvvw

If you are going to do this computationally, I’d suggest using a matrix and setting this up as a very simple markov chain with one absorbing state. (The matrix is extremely sparse.).

The equation is $A^{n}x =b$

x is an appropriately sized vector filled with zeros, except it’s first entry is 1. (Since A will be 21 by 21, x will need to be 21 x 1).

n (iterations) = 50

the transition matrix A is 21 x 21 (i.e. 0 heads in a row, 1 heads in a row, 2 heads in a row… 19 heads in a row, and the absorbing state of 20 heads in a row).

each entry of the top row (i.e. row 0) has a value of 1 – p_heads — except the final (absorbing) column has a zero there. Then for every column j, if A[j+1, j] exists, A[j+1, j] = p_heads.

Finally, for the absorbing state (i.e. the final column), set the bottom right cell = 1, and the top right cell = 0 as well.

After 50 iterations grab the result in the final cell of b. i.e. b[-1]. I wouldn’t mess around with the eigs. Just iterate through the sparse matrix (e.g. use scipy.sparse and a for loop).

The suggested Python solution (or similar solution in another programming language) will quickly run out of stack due to the recursion. Here’s an equivalent (tested) solution in Java without the recursion:

calculate(10000, 10, 0.5, new HashMap());

static double calculate(final int trials, final int minStreakLen, final double successProb, final Map savedTrialsResultMap) {

for (int tailTrials = minStreakLen; tailTrials <= trials; tailTrials++) {

double probInternal = Math.pow(successProb, minStreakLen);

for (int firstTail = 1; firstTail = minStreakLen; firstTail++) {

final Double previouslyCalculatedProb = savedTrialsResultMap.get(tailTrials – firstTail);

probInternal += Math.pow(successProb, firstTail – 1) * (1.0 – successProb) * previouslyCalculatedProb;

}

savedTrialsResultMap.put(tailTrials, probInternal);

}

return savedTrialsResultMap.get(trials);

}

Pingback: Bernoulli trials macro give's me an error when greater value is introduced

Your closed form summation formula can be obtained directly from the inclusion-exclusion principle. Consider one or more streaks of at least K successes each immediately preceded by a failure. Your second (negated) summation gives the probability that we have at least one such streak. Each term is the probability of T such streaks multiplied by the number of places each one can start (with the failure). This over counts the cases where we have >T streaks, and this over counting is corrected exactly by the succeeding terms via the inclusion-exclusion principle. For example, the first term double counts the cases of 2 streaks, triple counts the cases of 3 streaks, etc. The second term uses the same over counting method to count the cases of 2 streaks which it subtracts from the first term so these are counted correctly, but it also counts the cases of 3 streaks 3 times, the cases of 4 streaks 6 times, etc., so these are now undercounted after the subtraction. Each successive term is alternately added or subtracted until after all the terms, the exact probability is obtained. However, this ignores the case where the first K flips are successes since these are not preceded by a failure. Your first term handles this case. It takes the probability of such a streak p^K and multiplies by a summation representing the probability that there are no streaks of K successes starting with a failure in the remaining N-K flips (since those are counted by the second term). This summation performs inclusion-exclusion just as the other one, except the first term is 1 since we are computing 1 minus the probability that we have any such streaks.

Here is an alternate derivation of the same closed form formula using generating functions:

https://arxiv.org/PS_cache/math/pdf/0511/0511652v1.pdf

There is a computational issue with this method. When finite precision arithmetic is used, numbers can be subtracted which differ from each other by an amount that is too small to be accurately represented, causing the result to be lost. For this reason, I generally use the recursion. This requires computing a greater number of terms, but the terms don’t require combinations. It’s only necessary to maintain K+1 results in memory at one time. Other possibilities include using a Markov matrix or using the generating function with a Fourier transform.

Pingback: Interesting derivation for the probability of at least K wins in a row – Nevin Manimala’s Blog

#Python:

def solveInteger(trials,run_length,faces,pos_faces):

if trials == run_length:

return pos_faces**run_length, faces**trials

elif trials < run_length: return 0, faces**trials

modulo = run_length + 1

neg_faces = faces – pos_faces

addthingy = [0 for x in range(modulo)]

addthingy[0] = pos_faces**run_length

addthingy[1] = addthingy[0]*neg_faces

posouts = addthingy[1] + addthingy[0]*faces

precalc_multiplier = pos_faces**run_length*neg_faces

i = 2

for x in range(run_length+2,trials+1):

if i == modulo:

i = 0

addthingy[i] = faces*addthingy[i-1] – precalc_multiplier*addthingy[i-modulo]

posouts = posouts*faces + addthingy[i]

i += 1

print(posouts/faces**trials)

N=10

K=4

solveInteger(N,K,2,1)

a very nice result that seem to have been missed, is to let

this is strictly speaking a lower bound, but it is actually a tight one and gives a very simple approximation to a messy problem. You can also easily see the qualitative nature of exponential decay in probability of no-run occurring, and the form is suggesting that an exponential distribution (or technically geometric for discrete time) is in some sense what is going on under the hood here.

It require a rather long detour into markov chains to justify this, though a nice result.

The idea for the recursion also works when the Bernoulli trials have non-identical probabilities.

If is the probability of trial i and

is the probability of trial i and  then

then

For a unweighted coin you could look at all possible outcomes and count the ones that have a streak and then divide by the total number of possible outcomes. For a weighted coin that favors head 2 out of 3 times you could create a representation of all outcomes by taking all the outcomes for a fair coin and duplicating each outcome for every head it contains. Then you can count the number of outcomes that have your streak and divide it by the total number of outcomes.