The original question from “A” was:

I’m reading Sean Carroll’s book Something Deeply Hidden, which is entirely about Many Worlds, and I’m following along for the most part, but there’s something I still don’t understand. […] Carroll considers an example in which an electron, passed through a magnetic field, has a 66% probability of being deflected upwards and a 33% probability of being deflected downwards as a result of its spin. […] if I fire one electron through the field, two universes will result, one with each outcome. Based on that one data point, I would have no ability to determine if the odds on my electron were 66-33, 50,50, 90-10, or any other distribution. I would need to fire, say, a hundred electrons in sequence and note down their outcomes. […] because this split is binary, it seems like over a hundred trials my results in almost all universes should converge towards a 50-50 distribution. A process which assigns unequal weights to two states doesn’t seem possible. Is this an example of oversimplification for a casual text?

The original question from “Laura” was:

I am reading Sean Carroll’s book Something Deeply Hidden and he finally answered a question I had, but now I am more confused.

My original question was about where the extra “stuff” (matter) is coming from if the Many Worlds interpretation is correct. If there is an infinite number of worlds, how are we conserving energy? This seems to go against the conservation of energy rule. I was excited to read Carroll’s answer to the question until I learned what it was: each world gets “thinner.” Gah!

Is this like the philosophy thought experiment: everything doubles in size every day, but so do all our measuring instruments so we will never know. This is unfalsifiable, so provides no real information/answer.

Carroll gives the example of a bowling ball world that, when it decoheres, becomes (as an example) two bowling ball worlds. Each bowling ball shares the original mass so they still add up to the total mass of the original bowling ball. Now do that infinity times- and I don’t get how we can get that thin and still exist. (Thought: Maybe this is how the multiverse ends.)

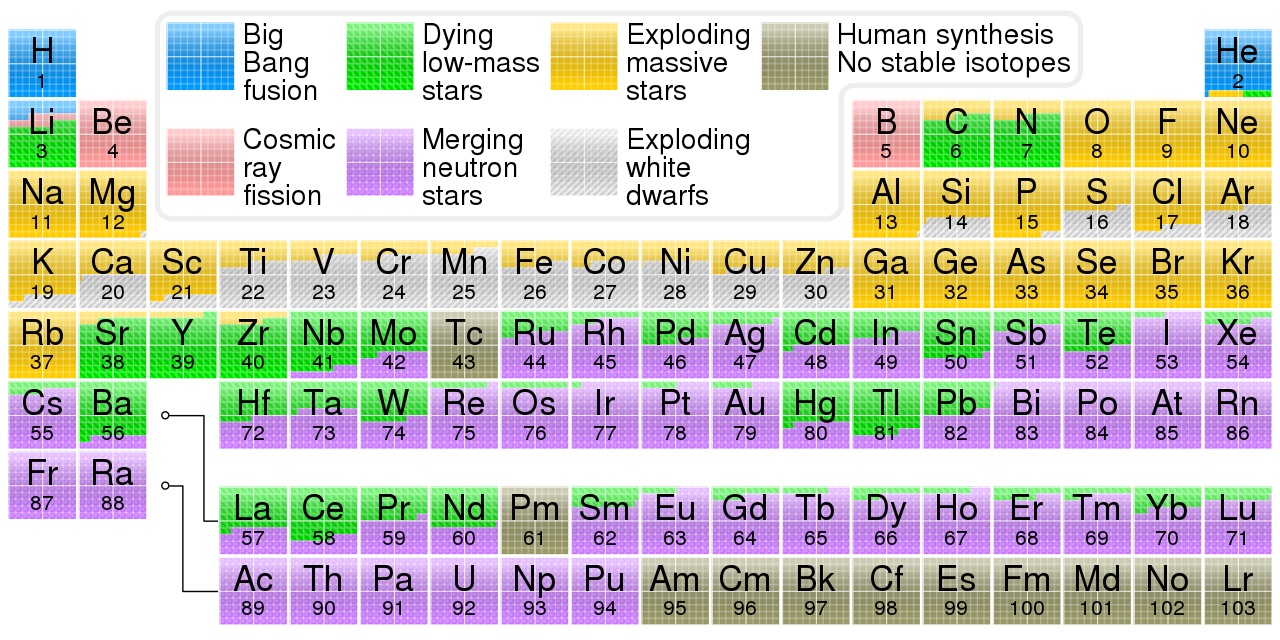

Physicist: When you watch a sci-fi series (almost any of them) at some point there’s an episode about parallel worlds, loosely justified by quantum mechanics, and ultimately just an excuse to get the actors to swap wigs. This is how most people are introduced to the Many Worlds Interpretation and, while not exactly wrong, there are some caveats to include in the episode credits.

First a little background. If you’re already familiar with what the Many Worlds Interpretation is and don’t (justifiably) feel that it’s completely crazy, feel free to skip down to “To actually answer the questions…“.

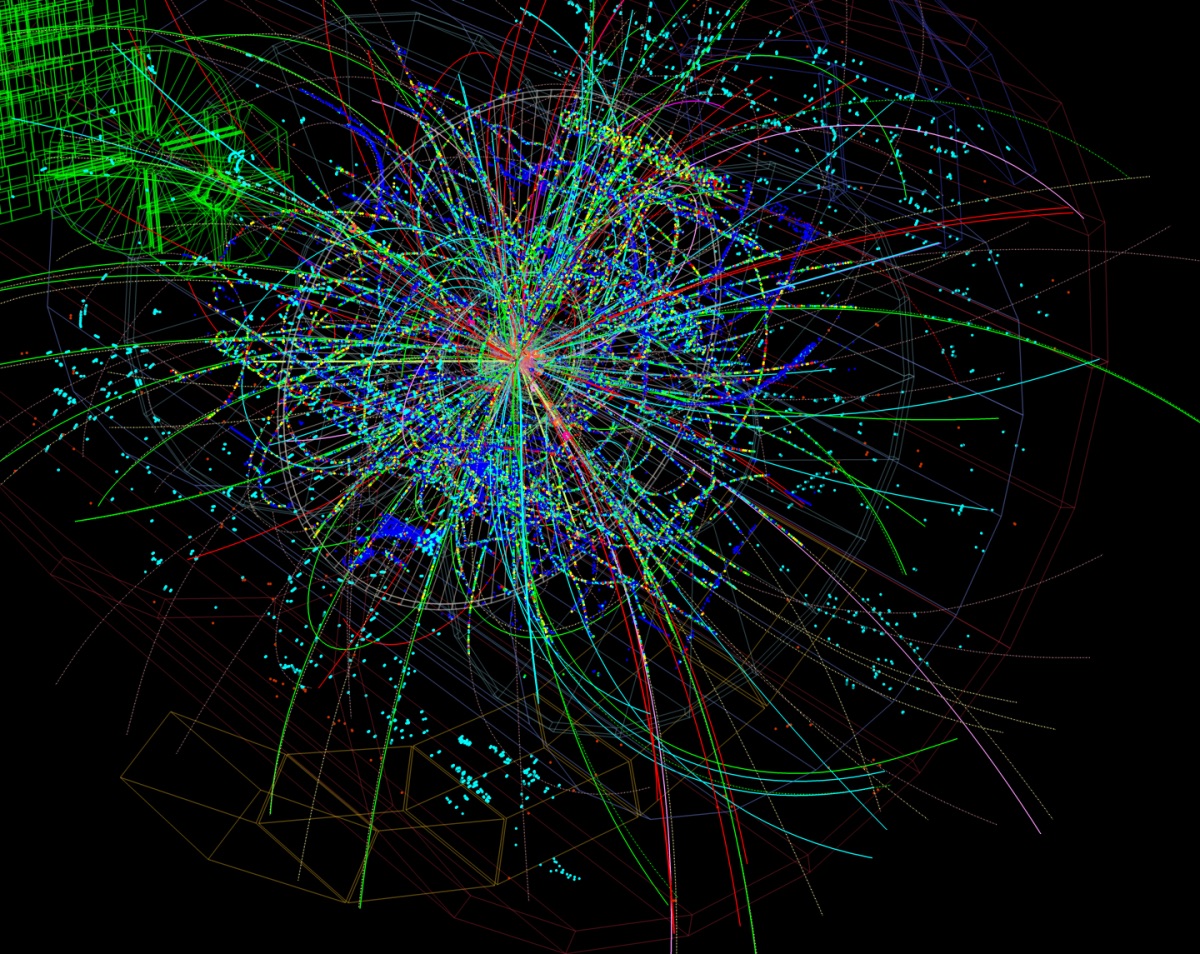

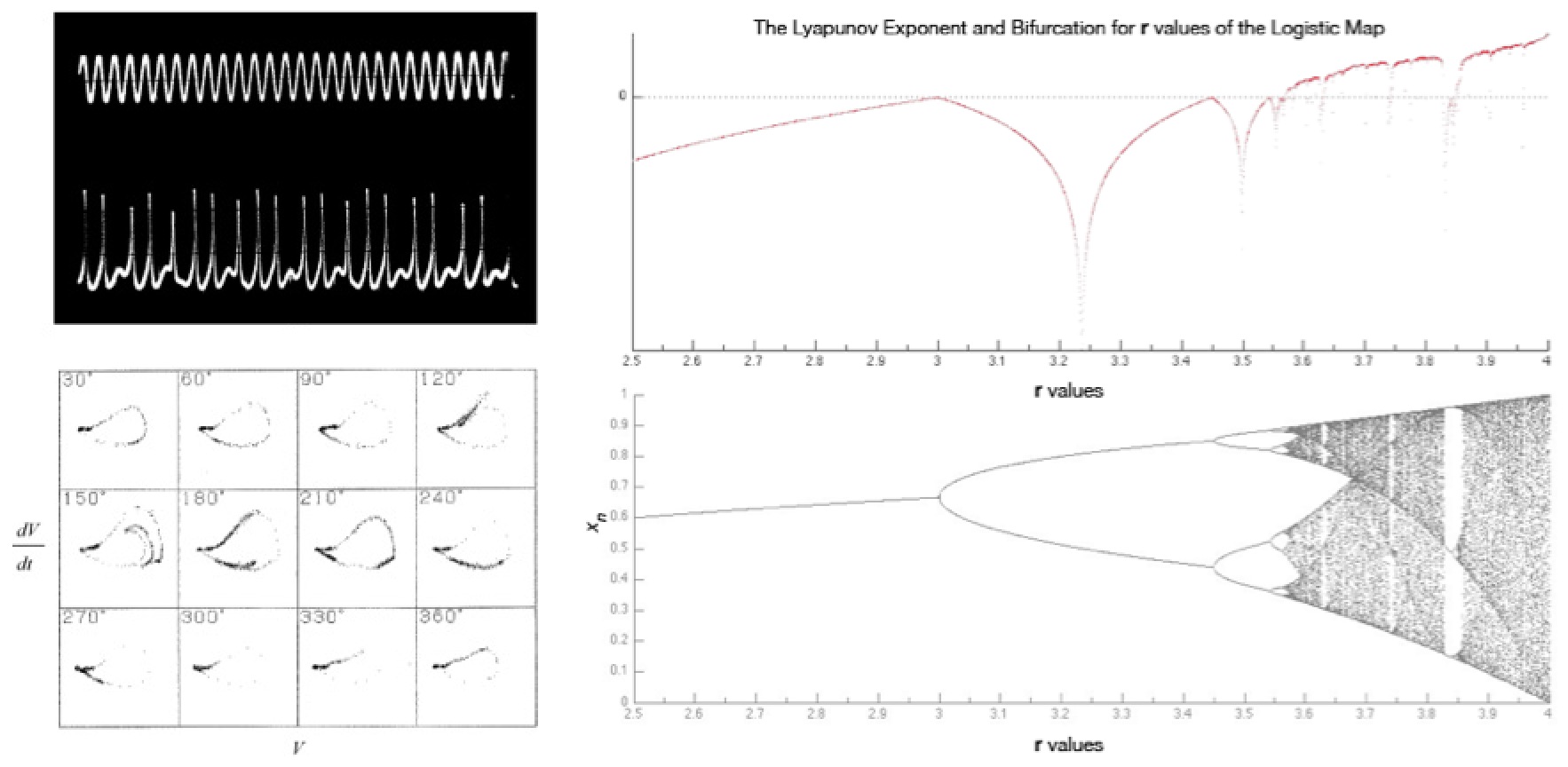

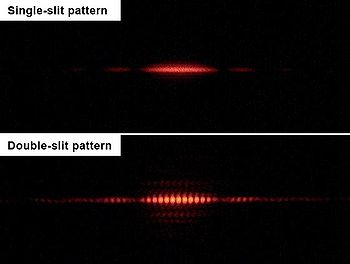

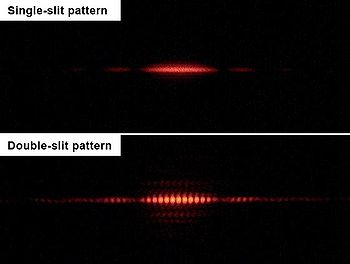

Quantum phenomena exhibit “superposition”, meaning that they are often in multiple states simultaneously. The first and one of the more jarring examples is Young’s double slit experiment. A barrier with two closely separated slits is placed between a coherent light source (like a laser or a pin point) and a projection screen. When light falls on the barrier we see a collection of bright and dark bands on the screen, instead of just a pair of bright bands (which is what you’d expect for two slits). This is completely normal and to be expected, because light is a wave and we know how waves work. In fact, when Young first did this experiment in 1801, he used it to accurately determine the wavelength of visible light for the first time. The double slits genuinely couldn’t be a cleaner, easier-to-predict, example of wave-like behavior.

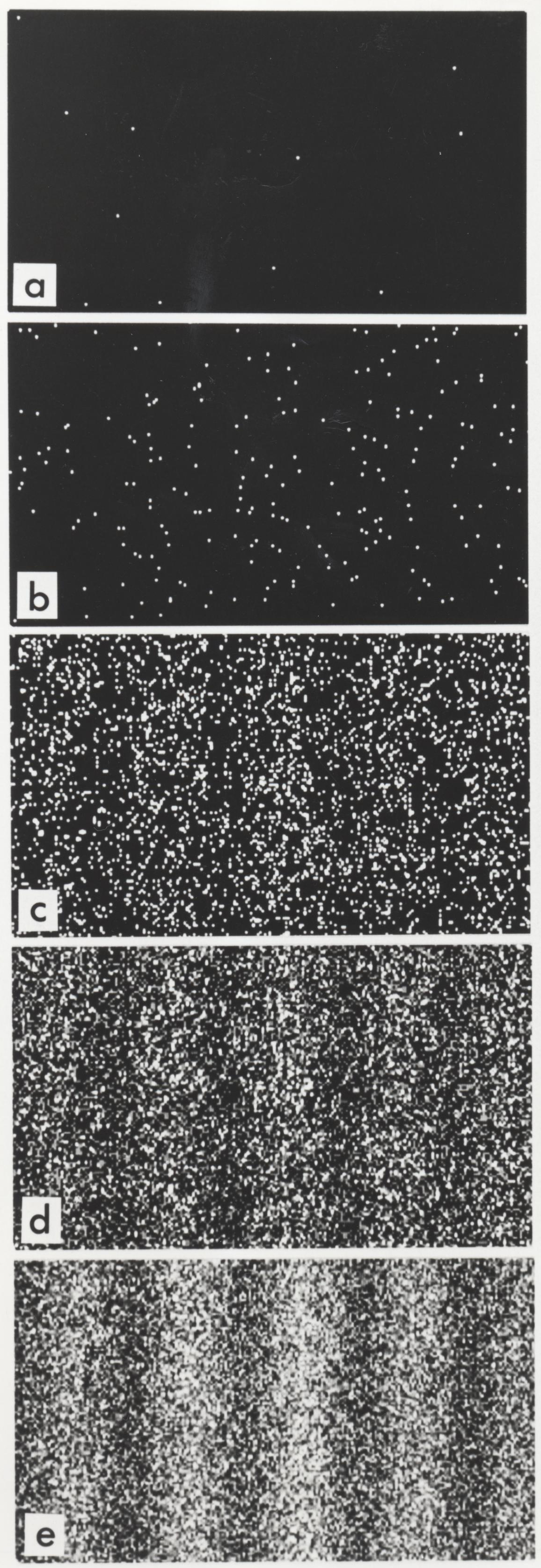

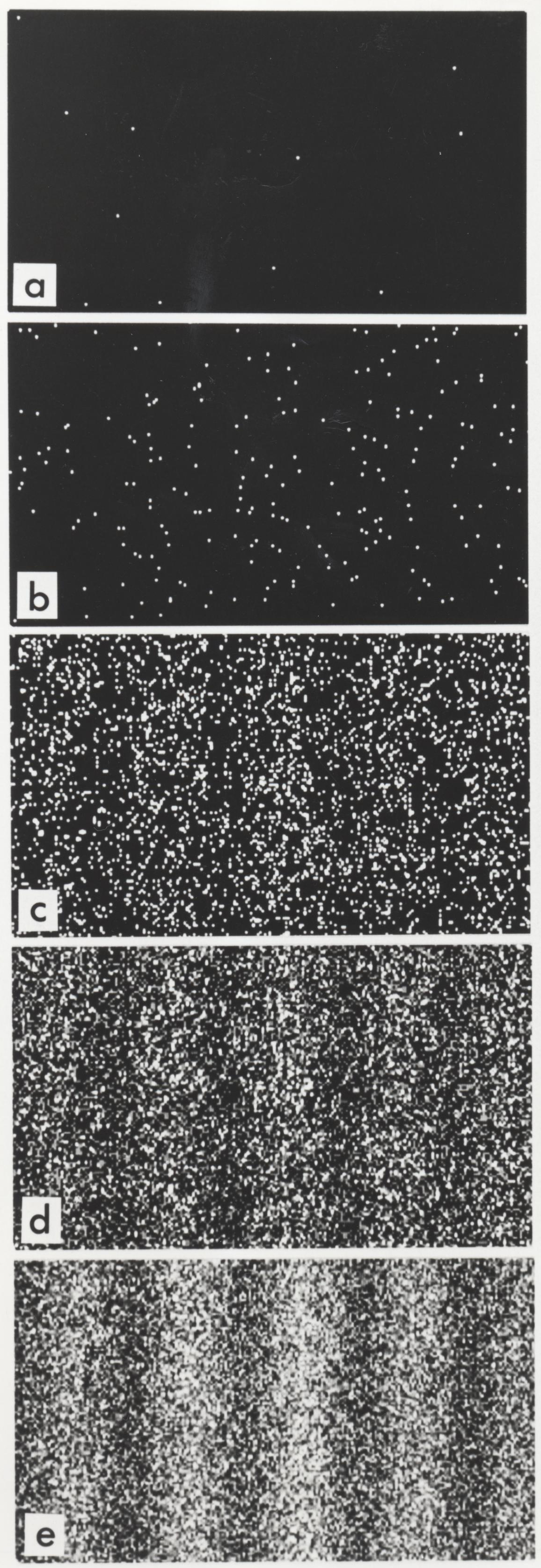

Send photons through the double slit barrier one at a time, carefully keeping track of where they end up, and you’ll find they still interfere like waves.

Repeat this exact experiment, but turn down the light so low that only one photon is released at a time and, as you’d expect, only one photon impacts the screen at a time. But terrifyingly, when we keep track of where these individual photons arrive, we find they line up perfectly with how intense the light was when it was turned on fully. Each photon interferes with itself, creating a pattern identical to that created by a wave going through both slits.

The double slit experiment, in a very non-unique, non-special way, shows that quantum phenomena have two different sets of behavior. When light is passing, undetected, through both slits we say that it’s “wavelike” and is in a “superposition” (it’s in multiple places/states). When the light impacts the screen and is detected we say that it’s “particlelike” and is in a “definite state”. “Wavelike” is an old term, and the one most likely to sound familiar, but the fact that a photon behaves specifically like a wave isn’t nearly as important as that fact that it managed to go through both slits.

Superposition is one of those things we absolutely cannot escape. Although there are plenty of disagreements at the fringe of quantum theory (as there ever should be), the idea of superposition is not one of them. It’s bedrock. It would be like competing climatologists arguing about whether rain falls up or down.

“Wave/particle duality”, the juxtaposition of superposition and single-position, is at the heart of the “measurement problem”, which asks why these two behaviors exist, what makes them different, and what gives rise to them. In particular, since every isolated system seems to be operate in superpositions, what is it about measurements that causes those many states to “collapse” into just one?

Making the measurement problem especially problematic, and pushing us closer to the realm of philosophy, is the fact that without exception every investigation into the limits of quantum phenomena has told us that there doesn’t seem to be one. We’ve established entanglement between continents, kept objects (barely) big enough to be seen in superpositions of mechanically vibrating states, performed the double slit experiment on molecules with thousands of atoms, … the list doesn’t end. The difficulty in demonstrating superposition isn’t a fundamental issue, like going faster than light, it’s an engineering issue, like going faster than sound. Large sizes, distances, and times make manipulating quantum systems much more difficult, but not impossible. Which forces some uncomfortable questions. Is there some inexplicable, never-observed “collapsing effect” that forces the world around us to actually be single-stated or, if superposition is the rule, why does the world seem to be single-stated?

There are a lot of theories about what causes collapse. Very broadly, they can be grouped into three schools of thought: “the Copenhagen Interpretation”, “the Many Worlds Interpretation”, and “shut up and calculate”. Adherents to Copenhagen insist that the macroscopic world we see around us really is in a single state and that collapse is a real, physical thing. Practitioners of the Many World Interpretation say that superposition is literally universal and we experience that by living separately in an effectively infinite set of “worlds”. And finally, for most physicists the measurement problem isn’t a problem. If you’re a geophysicist, delving philosophical questions about the weird quantum properties of the semiconductors in your equipment isn’t nearly as important as that equipment working. The “shut up and calculate” school asks and answers the question “Is there a problem?”.

The appeal of Copenhagen is that it feels right. Maybe tiny particles can be in superpositions, but at the end of the day you’re not. You’re you, not many yous. The problem with Copenhagen is a lot like the problem with geocentrism (the notion that the Earth sits still in the center of the universe). We can see lots of moons, including ours, orbiting exactly in accordance with Newton’s laws of gravity and motion. But the planets move in wildly swinging paths under the influence of inexplicable forces, while the Earth inexplicably sits still. That’s two very different systems floating around in a bunch of “somehow it works out”.

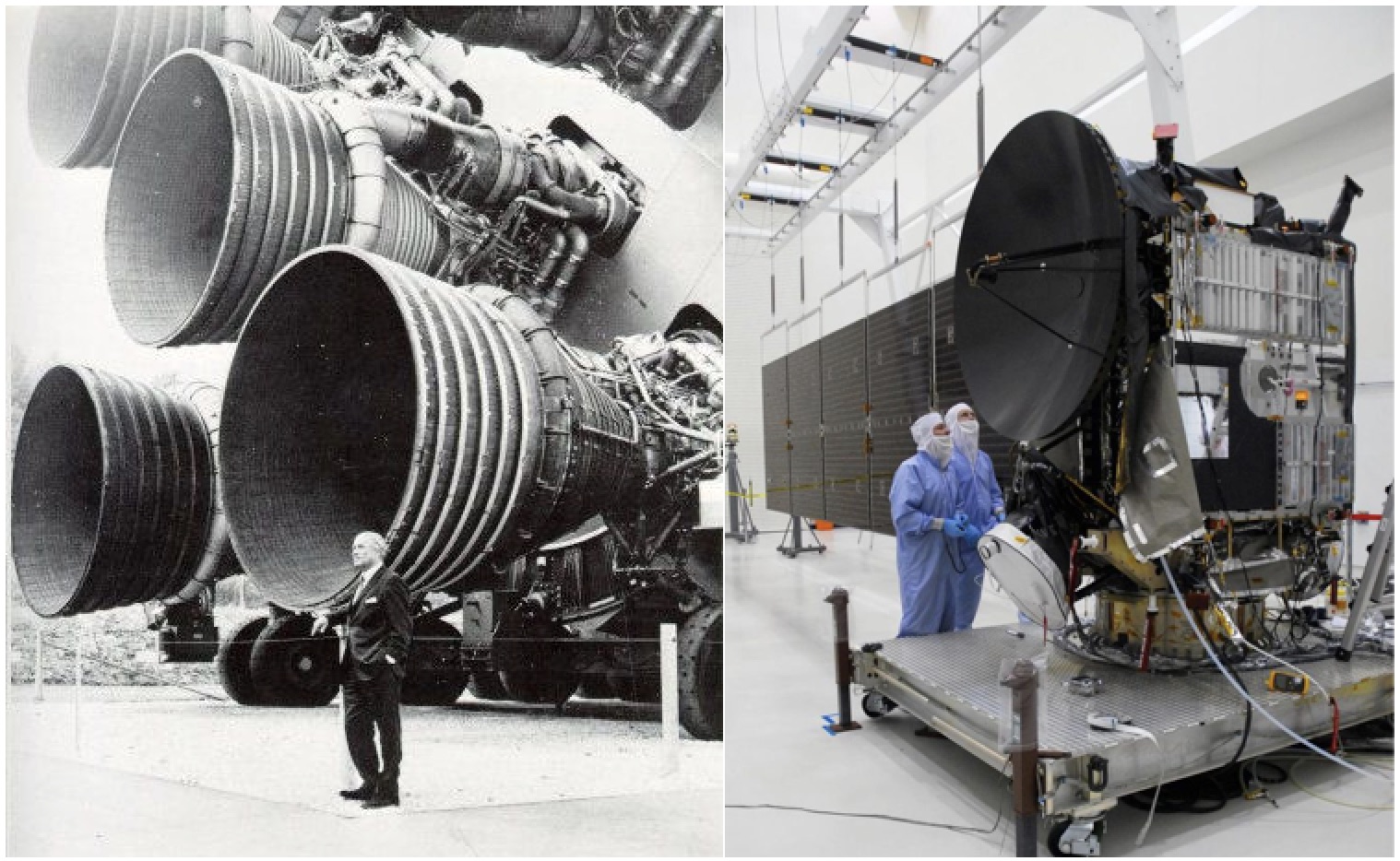

Left: The Galilean Moons of Jupiter are a great model of the solar system, orbiting in the same plane along elliptical paths. Right: Applying the same rules to everything in the solar system at large, we can explain why Mars jogs around the sky when it’s overhead near midnight without invoking any new rules or mysteries. The Earth is moving too.

Many Worlds is a bit like heliocentrism; everything is treated in exactly the same way under the exact same laws, with no extra inexplicable effects or special circumstances. The problem is that Many Worlds is so mind-bending that it shouldn’t be brought up in polite company. You don’t feel like you’re moving right now, because that’s how you should feel with the entire Earth moving right along with you. And you don’t feel like you’re in many states, because that’s how every state should feel. That takes a little unpacking.

In spite of the interference pattern produced by going through both, when we put a photo-detector behind each slit we find that each photon is caught going through only one. As far as (either version of) the photon is concerned, it went through a slit, arrived at a screen, and suddenly a bunch of physicists are scratching their chins and/or yelling at each other.

But why doesn’t the photon notice the “other photon”? An important property of quantum-ness is “linearity”, which means that the end result is always a clean sum of all of the contributing pieces. If you and a friend each roll dice and add up the results, the dice didn’t need to know about each other or have some kind of spooky connection, even though the ultimate result requires all of the dice. Similarly, the pattern on the screen is the sum of the contributions from the two slits, which means that the different versions didn’t need to work together or be aware of each other to create it. The stuff being added together can be positive, negative, or even complex-valued, which is why opening the second slit actually removes light to create dark fringes, but the important thing is linearity; one state doesn’t “know” about the other.

Ripples are a linear phenomena. The height of the water at any location is the sum of the ripples there, and yet despite working together in this way, the ripples ignore each other.

This is the idea behind the Many Worlds Interpretation: when a set of options presents itself, like going through one slit or the other, both options are taken and neither knows about the other. For all intents and purposes, the various states of a thing exist in their own worlds from their own point of view. That point of view is an important distinction; each version of each photon in the double slit experiment may say that they’re going through only one slit and that other versions must be in “other worlds”, but of course all of those versions are ultimately in the same room, contributing to the interference pattern we observe.

To really show how ridiculous superposition is, Schrödinger created the “Schrödinger’s Cat thought experiment”, wherein an unfortunate cat is placed in a box with a decent chance of being killed. Schrödinger describes how the cat can be put into a superposition of both alive and dead, like  , where by Born’s rule,

, where by Born’s rule,  indicates that each possibility has a one-half chance of being observed.

indicates that each possibility has a one-half chance of being observed.

Schrödinger then sagely points out that you’ll never see that superposition in practice, because when you open the box the cat will obviously always be one state or the other. This experiment (sans cat) is done whenever any measurement is done on a superposition of states and the single result recorded (which is frequently). So, given that superposition is a thing, this thought experiment forces us to ask “Why does observation cause collapse?”.

Not to be outdone, Wigner came up with his own thought experiment, “Wigner’s Friend” (Wigner claimed to have lots of friends, but for whatever reason, the one in his thought experiment never had a name). The cat, the box, and Wigner’s friend are all put into an even bigger box and the Schrödinger’s cat experiment is run again. But will the cat still collapse into a single state when observed? The Wigner’s Friend thought experiment forces us to ask “Does observation cause collapse?”

Excitingly, this was recently done (with particles, not cats and people) and the answer is a resounding “nope!”. If you can do a measurement entirely inside of an isolated system, then there’s no collapse; the measurer and measuree become “entangled”, but stay in a superposition of states. Before Wigner’s Friend opens the box, the Friend-and-Cat state is a superposition, something like  , and after the Friend opens the box, their entangled state is a superposition, like

, and after the Friend opens the box, their entangled state is a superposition, like  . Just like the experiment with just the cat, there’s a 50/50 chance of either pair of results, “relieved/alive” or “horrified/dead”. In the actual experiment the experimenters had a choice between interfering the states to show that they are in superposition or simply asking the Friend what they see.

. Just like the experiment with just the cat, there’s a 50/50 chance of either pair of results, “relieved/alive” or “horrified/dead”. In the actual experiment the experimenters had a choice between interfering the states to show that they are in superposition or simply asking the Friend what they see.

If you asked Wigner’s Friend what it felt like to be in a superposition of states, their answer would be “Um… normal?”. So although we can’t come close to putting entire people into a superposition of states, we can infer what it feels like. Each of the different versions of you in the superposition all feel like they’re in a single state, all have consistent personal histories, and all feel like they’re living completely normal, non-quantumy lives.

Point is, you already know exactly what it feels like to be in a superposition of states. Way to be!

To actually answer the questions we need to look at how Many Worlds is usually presented and compare it to a nice standard quantum experiment like, just for example, the double slit experiment. As bizarre as Many Worlds gets, it’s never about vast universes tearing apart, it’s about regular, dull-as-dishwater, superposition.

Say you needed to decide between a trip to the Moon or the bottom of the sea, but rather than flip a coin and go to one, you measure a quantum system (like Wigner’s Friend and the cat) so you can go to both. Many Worlds folk would say “the world split in two”, one for each result and excursion. Probability is a little hard to define in this situation, since the question “did you go to the Moon or the bottom of the sea” doesn’t have an overall answer, even though for each version it certainly seems to.

(By the way, I’m not advocating spending any money on it, but there is an app, “Universe Splitter”, that purports to measure a quantum system and report the results. In every way it is absolutely indistinguishable from a coin flip.)

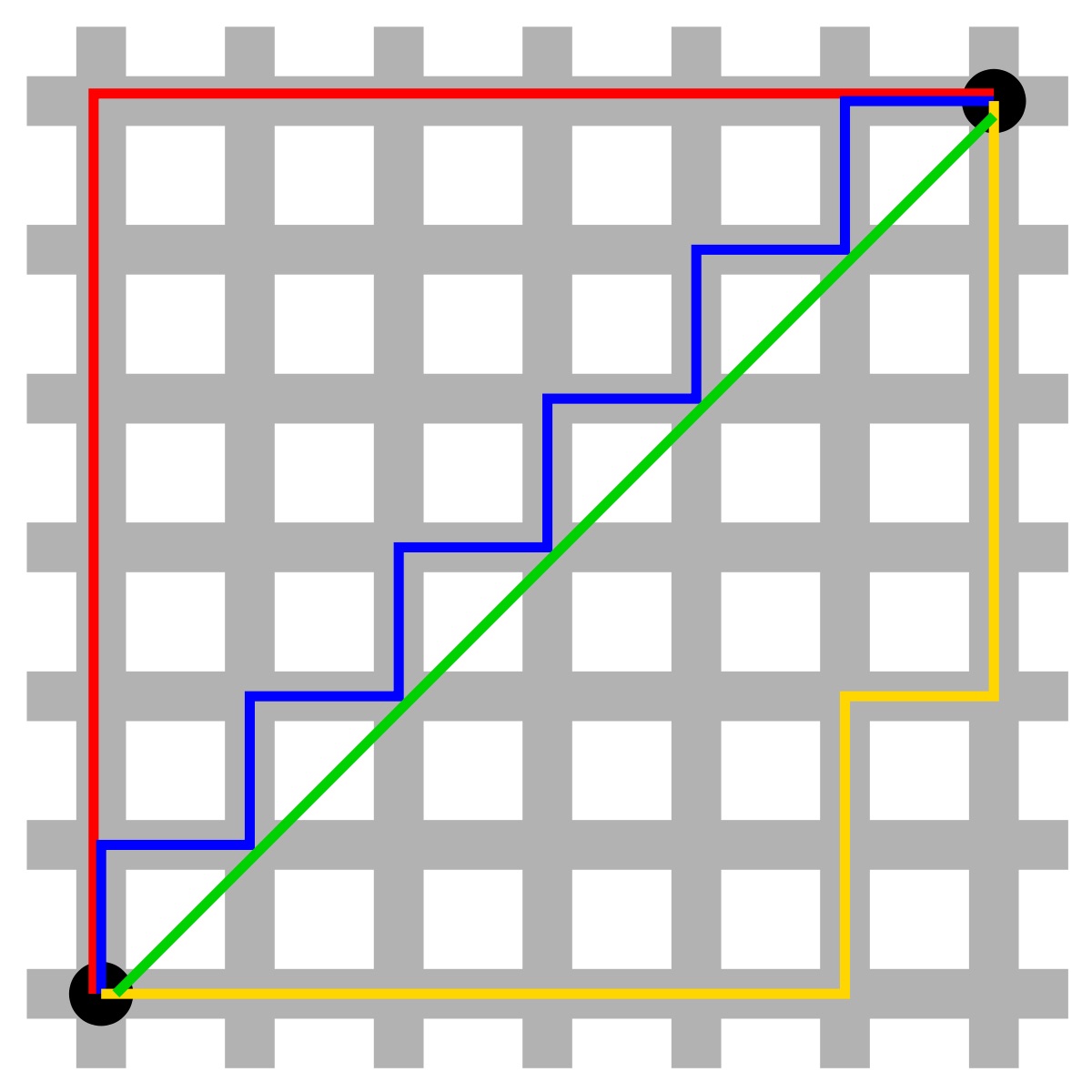

So, consider the double slit experiment. Even though each photon goes through both slits, you can easily make it so that they go through one more than the other. Nothing fancy, just put the light source closer to one slit than the other, or even better, make one slit wider. You’re more likely to see a photon coming out of the wider slit when you measure, and the wider slit will have a proportionately greater influence on the interference pattern. In this case you can easily model the skewed probability by considering many identical slits, where some of them happen to be squished together into a single slit. In fact, in practice that’s exactly what you have to do to accurately describe the double slit pattern.

The smaller pattern is produced by the double slit interference, but the larger “beats” of the single slit pattern are due to the non-zero width of the slits.

The important thing here is that the dichotomy, the “splitting”, isn’t a thing. Or at the very least, it isn’t what dictates probability. The mathematical formalism behind the quantum state describes it as “a vector in a complex vector space” (it’s a long list of complex numbers). Each number dictates the probability that a measurement will produce a particular result and whenever you have to combine two or more causes of an event, these complex numbers are what you add together. In a very direct sense, the “quantum state vector” describes reality, what is and what isn’t. There are arguments, halfway decent arguments, that try to justify why the quantum state vector and probabilities are related through the Born rule (taking the square magnitude of the complex value to find the probability), but ultimately this should be treated as an axiom. It’s true in as much as it works perfectly at all times in every situation that it has ever been tested, but it’s merely assumed to be true because we can’t derive it from simpler things.

When we make measurements (quantum mechanically or not) we gain information and with that information we can change our “priors”, the things we used to determine probabilities. For example, say you’ve rolled and covered a die. The probability of any result is 1/6, so listing off the “probability distribution” for all six results is {1/6, 1/6, 1/6, 1/6, 1/6, 1/6}. Now say you’ve learned that the result was odd. All of the even possibilities disappear, but the odds are affected too: {1/3, 0, 1/3, 0, 1/3, 0}. The distribution must always be “normalized”, meaning that the probabilities always sum to one. This is how all probabilities are defined; not as “a true probability” but as “a probability given that…”.

Now say that the experiment is repeated with a quantum die. The experience is exactly the same, but now every result happens. The probabilities in the “no evens” and “no odds” worlds are {1/3, 0, 1/3, 0, 1/3, 0} and {0, 1/3, 0, 1/3, 0, 1/3}. On the one hand you could say that the “worlds” are getting spread thinner, but in reality nothing is appreciably different before or after a measurement. The Sun still rises, Rush still rules, and probabilities still sum to one. You could role dice for months, getting strings of results within minutes never before seen, and the world won’t be any different than it was before you started (assuming you can get your job back and explain to your friends and loved-ones why you disappeared for months). The results that don’t come up instantly become irrelevant as you re-normalize on move on.

A rare picture of a Yahtzee player not destroying the universe. The rolls of the dice before don’t “thin out probability” on the rolls to come, and measurements don’t “thin out” the universe in exactly the same way.

Evidently energy and existence in general is the same way: perpetually normalized. If not then you should be able to tell the difference pretty quick. Say you want to shoot two targets, but only have one bullet. You could flip a coin and shoot one, but why not measure a quantum system so you can do both instead? If energy had to be split between universes, then you’d find that you don’t hit either target because your bullet is suddenly half-dud.

A good way to gain some insight into this, you’ll be shocked to learn, is to consider the double slit experiment. Release one photon with X energy and exactly X energy will impact the screen. We say that the photon goes through both slits and so it makes sense to say that the photon’s energy is spread out, with X/2 going through each slit. However, superpositions can never be directly observed. In the double slit experiment we infer that the photon goes through both slits based on the interference pattern. That doesn’t mean each slit gets half a photon (photons can’t be divided), it means that this system is in a superposition of both slits having an entire photon. This is re-normalization in the act; when you measure where you expect there to be “some photon” you either find one entire photon or the photon is somewhere else. Even when we’re busy inferring things about the photon, we’re always talking about one, full photon.

Similarly, when we make ourselves part of the quantum system and worry about other versions of ourselves with different perspectives, we infer that those other “worlds” will also be accessing the same energy we do. And in some sense they are. But just like probabilities or entire photons, that energy doesn’t get watered down. The system as a whole is in a superposition of states where all of the energy is present. Every measurement produces a result where the exact same amount of energy exists before and after.

It’s cheap to say “that’s how the math works”, but this is bedrock stuff and there’s no digging down from here. If you’ve gotten this far and you still don’t feel comfortable with what exactly a superposition is, that’s good. Feynman used to get bent out of shape when anyone asked him “whys” and “whats” about fundamental things. With regard to the weird behavior of quantum systems and why we immediately turn to the math, he said

“There isn’t any word for it, if I say they behave like a particle, it’ll give the wrong impression. If I say they behave like waves… They behave in their own inimitable way. Which technically could be called `the quantum mechanical way.’ They behave in a way that is like nothing you have ever seen before. Your experience with things you have seen before is inadequate, is incomplete. […] Well, there’s one simplification at least: electrons behave exactly the same in this respect as photons. That is, they’re both screwy, but in exactly the same way.” -Richard “Knuckles” Feynman

That simplification, by the way, is the fact that you can use quantum state vectors, superpositions, Born’s rule, and the rest of the standard quantum formalism for literally everything. Small blessings. Since Feynman already came up, he also has a stance on energy that is simultaneously enlightening and disheartening, but may shed a little light on why you shouldn’t be too worried about it getting “thinned out”.

“There is a fact, or if you wish, a law governing all natural phenomena that are known to date. There is no known exception to this law – it is exact so far as we know. The law is called the conservation of energy. It states that there is a certain quantity, which we call “energy,” that does not change in the manifold changes that nature undergoes. That is a most abstract idea, because it is a mathematical principle; it says there is a numerical quantity which does not change when something happens. It is not a description of a mechanism, or anything concrete; it is a strange fact that when we calculate some number and when we finish watching nature go through her tricks and calculate the number again, it is the same. (Something like a bishop on a red square, and after a number of moves – details unknown – it is still on some red square. It is a law of this nature.) […] It is important to realize that in physics today, we have no knowledge of what energy ‘is’. We do not have a picture that energy comes in little blobs of a definite amount. It is not that way. It is an abstract thing in that it does not tell us the mechanism or the reason for the various formulas.” -Richard “Once Again” Feynman

Ultimately, the Many Worlds Interpretation does yield some useful intuition. It gives us a way to imagine a larger world where we exist in superpositions of states. We can ponder spiraling what if’s about the other versions of us and our world. But at the same time, it’s not entirely accurate and it does lead to some misleading intuition. In particular, about the universe “splitting” or even being in many pieces at all.

Very, very frustratingly, without declaring a measurement scheme in advance, you can’t even talk about quantum systems being in any particular set of states. For example, a circularly polarized photon can be described as some combination of vertical and horizontal states, so there’s your two worlds, or it can described as a combination of the two diagonal states, so there’s your… also two worlds. This photon is free to be in multiple states in multiple ways or even be in a definite state, depending on how you’d like to interact with it. For the world to properly “split” a distinction must be made about how the photon is to be measured, but that isn’t something intrinsic to either the photon or the universe.

In some sense, the Many Worlds interpretation is, at best, an imaginative way to roughly catalog the results of particular measurement results and not a good way to talk about the universe at large. For that, I humble advocate “relational quantum mechanics” where the system in question, the observer, and the universe at large are all assumed to be quantum systems and how they evolve is dictated by how they interact (and what measurements they use).