The original question was: Recently I’ve come across a task to calculate the probability that a run of at least K successes occurs in a series of N (K≤N) Bernoulli trials (weighted coin flips), i.e. “what’s the probability that in 50 coin tosses one has a streak of 20 heads?”. This turned out to be a very difficult question and the best answer I found was a couple of approximations.

So my question to a mathematician is: “Why is this task so difficult compared e.g. to finding the probability of getting 20 heads in 50 coin tosses solved easily using binomial formula? How is this task in fact solved – is there an exact analytic solution? What are the main (preferably simplest) approximations and why are they used instead of an analytic solution?”

Physicist: What follows was not obvious. It was the result of several false starts. It’s impressive in the same sense that a dude with seriously bruised legs, who can find anything in his house with the lights off, is also impressive. If you’re looking for the discussion of the gross philosophy, and not the detailed math, skip down to where you find the word “Jabberwocky” in bold. If you want specific answers for fixed, small numbers of coins, or you want sample computer code for calculating the answer, go to the bottom of the article.

The short answer: the probability, S, of getting K or more heads in a row in N independent attempts (where p is the probability of heads and q=1-p is the probability of tails) is:

$$S(N,K) = p^K\sum_{T=0}^{\infty}\binom{N-(T+1)K}{T}\left(-qp^K\right)^T - \sum_{T=1}^{\infty}\binom{N-TK}{T}\left(-qp^K\right)^T$$

Note that here $\binom{A}{B}$ is the choose function (also called the binomial coefficients) and we are applying a non-standard convention that

$$\binom{A}{B} = 0 \quad \text{for } A < B$$

which makes the seemingly infinite sums always have only a finite number of terms. In fact, for N and K fixed, the answer is a polynomial with respect to the variable p.

Originally this was a pure math question that didn’t seem interesting to a larger audience, but we both worked for long enough on it that it seems a shame to let it go to waste. Plus, it gives me a chance to show how sloppy (physics type) mathematics is better than exact (math type) mathematics.

Define $\{X_i\}_j$ as the list of results of the first j trials. E.g., if j=4, then $\{X_i\}_4$ might be “HTHH” or “TTTH” or something like that, where H=heads and T=tails. In the second case, $X_1=T$, $X_2=T$, $X_3=T$, and $X_4=H$.

Define “$E_j$” as the event that there is a run of K successes (heads) in the first j trials. The question boils down to finding $P(E_N)$.

Define “$A_j$” as the event that the last K terms of $\{X_i\}_j$ are T followed by K-1 H’s ($\{X_i\}_j = X_1X_2X_3X_4\ldots THHH\ldots HHH$). That is to say, if the next coin ($X_{j+1}$) is heads, then you’ve got a run of K.

Finally, define p = P(H) and q = P(T) = 1-p. Keep in mind that a “bar” over an event means “not”. So “$\bar{H} = T$” reads “not heads equals tails”.

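The probability of an event is the sum of the probabilities of the different (disjoint) ways that event can happen. So:

$$\begin{aligned}
\text{i)}\quad & P(E_{j+1}) \\
\text{ii)}\quad &= P(E_{j+1}\cap E_j) + P(E_{j+1}\cap \bar{E}_j) \\
\text{iii)}\quad &= P(E_{j+1}\cap E_j) + P(E_{j+1}\cap \bar{E}_j\cap A_j) + P(E_{j+1}\cap \bar{E}_j\cap \bar{A}_j) \\
\text{iv)}\quad &= P(E_j) + P(E_{j+1}\cap \bar{E}_j\cap A_j) + P(E_{j+1}\cap \bar{E}_j\cap \bar{A}_j) \\
\text{v)}\quad &= P(E_j) + P(E_{j+1}\cap \bar{E}_j\cap A_j) + 0 \\
\text{vi)}\quad &= P(E_j) + P(E_{j+1}\mid \bar{E}_j\cap A_j)\,P(\bar{E}_j\cap A_j) \\
\text{vii)}\quad &= P(E_j) + p\,P(\bar{E}_j\cap A_j) \\
\text{viii)}\quad &= P(E_j) + p\,P(\bar{E}_j\mid A_j)\,P(A_j) \\
\text{ix)}\quad &= P(E_j) + p\,P(\bar{E}_j\mid A_j)\,qp^{K-1} \\
\text{x)}\quad &= P(E_j) + qp^{K}\,P(\bar{E}_{j-K}) \\
\text{xi)}\quad &= P(E_j) + qp^{K}\left(1 - P(E_{j-K})\right) \\
\text{xii)}\quad &= P(E_j) + qp^{K} - qp^{K}\,P(E_{j-K})
\end{aligned}$$

iv) comes from the fact that $E_j \subseteq E_{j+1}$. If you have a run of K heads in the first j trials, of course you’ll have a run in the first j+1 trials. v) The zero comes from the fact that if the first j terms don’t have a run of K heads and the last K-1 terms are not all heads, then it doesn’t matter what the j+1 coin is, you can’t have a run of K heads (you can’t have the event $E_{j+1}$ and not $E_j$ and not $A_j$). vii) is because if there is no run of K heads in the first j trials, but the last K-1 of those j trials are all heads, then the chance that there will be a run of K in the first j+1 trials is just the chance that the next trial comes up heads, which is p. ix) the chance of the last K trials being a tails followed by K-1 heads is $qp^{K-1}$. x) If the last K (of j) trials are a tails followed by K-1 heads, then whether a run of K heads does or doesn’t happen is determined in the first j-K trials.

The other steps are all probability identities (specifically $P(C) = P(C\cap D) + P(C\cap \bar{D})$ and Bayes’ theorem:

$$P(C\mid D)\,P(D) = P(C\cap D)$$

).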

Rewriting this with some N’s instead of j’s, we’ve got a kick-ass recursion:

$$P(E_N) = P(E_{N-1}) + qp^{K} - qp^{K}\,P(E_{N-K-1})$$

And just to clean up the notation, define S(N,K) as the probability of getting a string of K heads in N trials (up until now this was $P(E_N)$).

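$S(N,K) = S(N-1,K) + qp^K - qp^K S(N-K-1,K)$. We can quickly figure out two special cases: $S(K,K) = p^K$, and $S(l,K) = 0$ when l<K, since there’s no way of getting K heads without flipping at least K coins. Now check it:

$$\begin{aligned}
\text{i)}\quad S(N,K) &= S(N-1,K) + qp^K - qp^K S(N-K-1,K) \\
\text{ii)}\quad &= S(N-2,K) + 2qp^K - qp^K S(N-K-1,K) - qp^K S(N-K-2,K) \\
\text{iii-vi)}\quad &\;\;\vdots \\
\text{vii)}\quad &= S(K,K) + (N-K)\,qp^K - qp^K\sum_{r=0}^{N-K-1} S(r,K) \\
\text{viii)}\quad &= p^K + (N-K)\,qp^K - qp^K\sum_{r=K}^{N-K-1} S(r,K)
\end{aligned}$$

ii) Plug the equation for S(N,K) in for S(N-1,K). iii-vi) is the same thing. vii) write the pattern as a sum. viii) the terms up to K-1 are all zero, so drop them.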
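The first recursion, S(N,K) = S(N-1,K) + qp^K - qp^K S(N-K-1,K), together with the two special cases, is already enough to compute answers. Here is a minimal Python sketch, assuming a bottom-up table; the function name `streak_probability_linear` is made up for this illustration:

```python
def streak_probability_linear(n, k, p):
    """Probability of a run of at least k heads in n flips, P(heads) = p.

    Uses S(N,K) = S(N-1,K) + q p^K - q p^K S(N-K-1,K)
    with S(l,K) = 0 for l < K and S(K,K) = p^K. Assumes k >= 1, n >= 0.
    """
    q = 1.0 - p
    # s[m] holds S(m, k); entries below index k stay at zero.
    s = [0.0] * (max(n, k) + 1)
    s[k] = p ** k
    for m in range(k + 1, n + 1):
        s[m] = s[m - 1] + q * p ** k - q * p ** k * s[m - k - 1]
    return s[n]


if __name__ == "__main__":
    # The question from the top of the post: 20 heads in a row in 50 fair tosses.
    print(streak_probability_linear(50, 20, 0.5))
```

Each new value needs only two earlier ones, so the whole table costs about N steps.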

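Holy crap! A newer, even better recursion! It seems best to plug it back into itself!

$$S(N,K) = p^K + (N-K)\,qp^K - qp^K\sum_{r=K}^{N-K-1}\left[p^K + (r-K)\,qp^K - qp^K\sum_{s=K}^{r-K-1} S(s,K)\right]$$

You can keep plugging the “newer recursion” back in again and again (it’s a recursion after all). Using the fact that $\sum_{i=0}^{n-1} 1 = \binom{n}{1}$ and, more generally,

$$\sum_{i=0}^{n-1}\binom{i}{T} = \binom{n}{T+1}$$

you can carry out the process a couple more times, and you’ll find that:

$$S(N,K) = p^K\sum_{T=0}^{\infty}\binom{N-(T+1)K}{T}\left(-qp^K\right)^T - \sum_{T=1}^{\infty}\binom{N-TK}{T}\left(-qp^K\right)^T$$

There’s your answer.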
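For anyone who wants to evaluate the closed form numerically, here is a rough Python sketch. It assumes the formula reads S(N,K) = p^K Σ_{T≥0} C(N-(T+1)K, T)(-qp^K)^T - Σ_{T≥1} C(N-TK, T)(-qp^K)^T, with the convention that C(A,B) = 0 whenever A < B. The helper names `choose` and `streak_closed_form` are invented for this example:

```python
from math import comb


def choose(a, b):
    """Binomial coefficient with the convention C(a, b) = 0 when a < b."""
    return comb(a, b) if a >= b >= 0 else 0


def streak_closed_form(n, k, p):
    """Closed-form probability of a run of at least k heads in n flips.

    Assumes k >= 1 and n >= 0. The sums stop at T = n // k because every
    later binomial coefficient is zero under the convention above.
    """
    q = 1.0 - p
    x = -q * p ** k
    t_max = n // k
    first = sum(choose(n - (t + 1) * k, t) * x ** t for t in range(0, t_max + 1))
    second = sum(choose(n - t * k, t) * x ** t for t in range(1, t_max + 1))
    return p ** k * first - second


if __name__ == "__main__":
    # 20 heads in a row somewhere in 50 fair tosses: roughly 1.5e-05.
    print(streak_closed_form(50, 20, 0.5))
```

The sums alternate in sign, so for large N and small K floating-point cancellation can become a problem; the recursions are the safer route there.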

In the approximate (useful) case:

Assume that N is pretty big compared to K. A string of heads (that can be zero heads long) starts with a tails, and there should be about Nq of those. The probability of a particular string of heads being at least K long is $p^K$, so you can expect that there should be around $E = Nqp^K$ strings of heads at least K long. When E≥1, that means that it’s pretty likely that there’s at least one run of K heads. When E<1, $E = Nqp^K$ is approximately equal to the chance of a run of at least K showing up.
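To get a feel for how rough this estimate is, one can set E = Nqp^K next to a brute-force simulation. The sketch below is only an illustration; the names `expected_streaks`, `has_streak`, and `simulate`, the trial count, and the fixed seed are all choices made here:

```python
import random


def expected_streaks(n, k, p):
    """The estimate E = N q p^K for the number of runs of at least k heads."""
    return n * (1.0 - p) * p ** k


def has_streak(flips, k):
    """True if the iterable of booleans contains k consecutive True values."""
    run = 0
    for heads in flips:
        run = run + 1 if heads else 0
        if run >= k:
            return True
    return False


def simulate(n, k, p, trials=20000, seed=0):
    """Fraction of simulated n-flip sequences containing a run of k heads."""
    rng = random.Random(seed)
    hits = sum(
        has_streak((rng.random() < p for _ in range(n)), k)
        for _ in range(trials)
    )
    return hits / trials


if __name__ == "__main__":
    n, k, p = 100, 8, 0.5
    print("estimate E:", expected_streaks(n, k, p))
    print("simulated :", simulate(n, k, p))
```

With N=100 and K=8 the two numbers land in the same neighborhood but do not agree exactly, which is what you would expect from an order-of-magnitude argument.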

Jabberwocky: And that’s why exact solutions are stupid.

Mathematician: Want an exact answer without all the hard work and really nasty formulas? Computers were invented for a reason, people.

We want to compute S(N,K), the probability of getting K or more heads in a row out of N independent coin flips (when there is a probability p of each head occurring and a probability of 1-p of each tail occurring). Let’s consider different ways that we could get K heads in a row. One way to do it would be to have our first K coin flips all be heads, and this occurs with a probability $p^K$. If this does not happen, then at least one tail must occur within the first K coin flips. Let’s suppose that j is the position of the first tail, and by assumption it satisfies $1 \le j \le K$. Then, the probability of having K or more heads in a row in the entire set of coins (given that the first tail occurred at position $j \le K$) is simply the probability of having K or more heads in a row in the set of coins following the jth coin (since there can’t be a streak of K or more heads starting before the jth coin due to j being smaller than or equal to K). But this probability of having a streak of K or more after the jth coin is just S(N-j,K).

Now, the probability that our first tail occurs at position j is the chance that we get j-1 heads followed by one tail, so it is $p^{j-1}(1-p)$. That means that the chance that the first tail occurs on coin j AND there is a streak of K or more heads is given by $p^{j-1}(1-p)\,S(N-j,K)$. Hence, the probability that the first K coins are all heads, OR coin one is the first tails and the remainder have K or more heads in a row, OR coin two is the first tails and the remainder have K or more heads in a row, OR coin three is the first tails and…, is given by:

$$S(N,K) = p^K + \sum_{j=1}^{K} p^{j-1}(1-p)\,S(N-j,K)$$

Note that what this allows us to do is to compute S(N,K) by knowing the values of S(N-j,K) for $1 \le j \le K$. Hence, this is a recursive formula for S(N,K) which relates harder solutions (with larger N values) to easier solutions (with smaller N values). These easier solutions can then be computed using even easier solutions, until we get to S(A,B) for values of A and B so small that we already know the answer (i.e. S(A,B) is very easy to calculate by hand). These are known as our base cases. In particular, we observe that if we have zero coins then the probability of getting any positive number of heads is zero, so S(0,K) = 0, and the chance of getting more heads than we have coins is zero, so S(N,K) = 0 for K>N.

All of this can be implemented in a few lines of (python) computer code as follows:

An important aspect of this code is that every time a value of S(N,K) is computed, it is saved so that if we need to compute S(N,K) again later it won’t take much work. This is essential for efficiency since each S(N,K) is computed using S(N-j,K) for each j with $1 \le j \le K$, and therefore if we don’t save our prior results there will be a great many redundant calculations.
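A minimal sketch of such an implementation, assuming the recursion S(N,K) = p^K + Σ_{j=1..K} p^(j-1)(1-p) S(N-j,K) with the base cases S(N,K) = 0 for K>N. The function name `streak_probability` and the use of `functools.lru_cache` for saving results are choices made for this illustration and may differ from the original listing:

```python
from functools import lru_cache


def streak_probability(n, k, p):
    """Probability of k or more heads in a row in n flips, P(heads) = p.

    Assumes k >= 1. The recursion depth grows with n, so very large n would
    need a bottom-up loop or a higher recursion limit.
    """

    @lru_cache(maxsize=None)  # save every S(m, k) the first time it is computed
    def s(m):
        if m < k:
            # Base cases: zero coins, or more heads wanted than coins available.
            return 0.0
        total = p ** k  # the first k flips are all heads
        for j in range(1, k + 1):
            # First tail at position j, then a streak among the remaining m - j flips.
            total += p ** (j - 1) * (1 - p) * s(m - j)
        return total

    return s(n)


if __name__ == "__main__":
    print(streak_probability(50, 20, 0.5))
```

Without the cache, each call would fan out into K further calls and the running time would grow exponentially in N.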

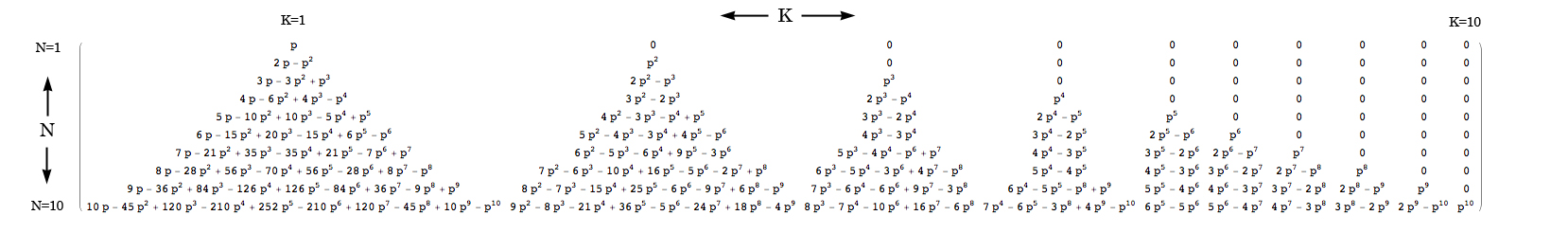

For your convenience, here is a table of solutions for S(N,K) for small values of N and K (click to see it enlarged):

![Probability S[N,K] of a streak of K or more out of N independent coin flips.](https://www.askamathematician.com/wp-content/uploads/2010/07/ProbOfStreak.jpg)

Hello! And thank You very much for your efforts and this explanation!

I need to go a bit further, and I’m stuck. The problem is to find the expected value E of the number of “runs of K or more successes (heads) in a row in N Bernoulli trials (coin flips)”, i.e. the average number of such runs in N trials for specified p and q=1-p.

Thanks to anyone for any help in this question! Hope there is also an elegant solution for it!

Let P(j, i) be the probability of j or more runs of K or more successes in i flips.

Then E is the sum of P(j, N) for j = 1, 2, 3, … floor((N+1)/(K+1)).

We may compute P(j, i) recursively as follows:

P(j, i) = P(j, i-1) + [P(j-1, i-K-1) – P(j, i-K-1)] * qp^K,

for i > j*(K+1) – 1

P(j, i) = p^(jK) * q^(j-1),

for i = j*(K+1) – 1

P(j, i) = 0,

for i < j*(K+1) – 1

P(0, i-K-1) = 1

Not sure how elegant that is, but I wrote a program to compute these this way years ago and verified it by simulation. I have not attempted to solve this 2-dimensional recursion in closed form. Let us know if you do. Excel spreadsheets that incorporate this calculation can be downloaded here:

https://www.daytradinglife.com/wp-content/downloads/stmm-pdfs/multiple-losing-streak-probability-calculator.pdf

Let P(j, i) be the probability of getting j or more streaks of K or more successes in i flips.

Then E is the sum of P(j, N) for j = 1, 2, 3, … floor((N+1)/(K+1)).

P(j, i) can be computed recursively as follows:

P(j, i) = P(j, i-1) + [P(j-1, i-K-1) – P(j, i-K-1)] * qp^K, for i > j*(K+1) – 1

P(j, i) = p^(jK) * q^(j-1), for i = j*(K+1) – 1

P(j, i) = 0, for i < j*(K+1) – 1

P(0, i) = 1.

Note that the special case j=1 provides a solution to the original problem, and unlike the recursion given by Mathematician, each probability is computed from only 2 previously computed probabilities rather than K. However, the preceding K terms must still be maintained in memory.

I don't know if that qualifies as elegant, but I wrote a program to do this years ago and verified it by simulation. I haven't attempted to solve the 2-dimensional recursion. Let us know if you do.
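For readers who want to try the two-dimensional recursion, here is a short Python sketch of it as stated above. The table layout and the names `run_count_table` and `expected_runs` are assumptions made for this example, not the commenter's original program:

```python
def run_count_table(n, k, p):
    """Return table[j][i] = P(j or more runs of k or more heads in i flips).

    Follows the recursion above, with P(0, i) = 1. Assumes k >= 1, n >= 0.
    """
    q = 1.0 - p
    j_max = (n + 1) // (k + 1)  # largest number of runs that fits in n flips
    table = [[1.0] * (n + 1)] + [[0.0] * (n + 1) for _ in range(j_max)]
    for j in range(1, j_max + 1):
        first = j * (k + 1) - 1  # fewest flips that can hold j separate runs
        table[j][first] = p ** (j * k) * q ** (j - 1)
        for i in range(first + 1, n + 1):
            table[j][i] = table[j][i - 1] + (
                table[j - 1][i - k - 1] - table[j][i - k - 1]
            ) * q * p ** k
    return table


def expected_runs(n, k, p):
    """Expected number of runs of k or more heads: the sum of P(j, n) over j >= 1."""
    table = run_count_table(n, k, p)
    return sum(row[n] for row in table[1:])


if __name__ == "__main__":
    print(expected_runs(50, 3, 0.5))
```

As a cross-check, linearity of expectation gives the same quantity directly: a run can start at flip 1 with probability p^K, or right after a tails at any of the N-K later starting points with probability qp^K each, so E = p^K + (N-K)qp^K whenever N ≥ K.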

Consider the related question of counting how many sequences of n coin flips contain no streaks of k or more heads. We can count this algorithmically by starting with a sequence of size 1 and keeping track of the counts for sequences ending in 0 through k-1 heads, then generating the next set of counts by considering what happens if the next coin is a heads or tails respectively. It can be seen through this formulation that the sequence has a nice property: the result follows a Fibonacci-like recurrence.

f(n) = 2^n, for n = 1, …, k-1

f(k) = 2^k – 1

f(n) = f(n-1) + f(n-2) + … + f(n-k), for n > k

The characteristic polynomial looks like x^k – x^(k-1) – x^(k-2) – … – x – 1

The largest real root of this polynomial can be used to approximate the growth of the sequence, much as the golden ratio does for the Fibonacci sequence: as n approaches infinity, f(n) will be of the order of (polynomial root)^n.

As k goes to infinity, the polynomial root will approach 2. This is expected because the total number of coin sequences is 2^n, so the number of sequences with no streaks of k or more cannot exceed that value. The root gets arbitrarily close to 2 as k grows, but it stays strictly below 2 for any fixed k, because as n goes to infinity there is bound to be a streak of k heads somewhere, so the fraction f(n)/2^n of streak-free sequences must shrink to zero.
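The recurrence is easy to try out. The following Python sketch is only an illustration; the names `count_no_streak` and `growth_ratio` are made up here, and it uses f(0) = 1 for the empty sequence so that the same sum also produces f(k) = 2^k – 1:

```python
def count_no_streak(n, k):
    """Number of length-n head/tail sequences with no run of k or more heads."""
    # Every sequence shorter than k is streak-free: f(m) = 2^m for m < k.
    f = [2 ** m for m in range(k)]
    for m in range(k, n + 1):
        # The last tail sits in one of the final k positions.
        f.append(sum(f[m - k:m]))
    return f[n]


def growth_ratio(n, k):
    """f(n+1) / f(n), which approaches the dominant root of the polynomial."""
    return count_no_streak(n + 1, k) / count_no_streak(n, k)


if __name__ == "__main__":
    print(count_no_streak(5, 2))                # sequences of 5 flips without "HH"
    print(1 - count_no_streak(5, 2) / 2 ** 5)   # chance of a streak for a fair coin
    for k in (2, 3, 5, 10):
        print(k, growth_ratio(60, k))
```

For a fair coin, 1 - f(n)/2^n is the probability of a streak of k or more heads in n flips, and the printed ratios creep toward 2 as k increases.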

Hope this was helpful.