The original question was: It seems to me that it’s impossible to measure the information content of a message without considering the recipient of the message. For example, one might say that a coin toss generates a single bit of information: the result is either heads or tails. I beg to differ. Someone who already knows that a coin would be tossed only gains a single bit when he or she hears, “The quarter landed on heads.” But someone else who didn’t know a coin was being tossed learns not only that the quarter landed on heads, but also that the quarter was tossed. One could also deduce the speaker’s nationality or what sort of emotional response the coin toss has elicited. If you’re creative enough, there’s no limit to how much information you can squeeze out of this supposedly one-bit sentence. Is it really possible to objectively quantify the amount of information a sentence contains?

Physicist: Information theory (like any theory) is unrealistically simple. By expanding the complexity of a model you’ll find that, in general, you can glean more information. However, before you jump into figuring out how much information a coin flip could theoretically convey, you start with the simple case: heads or tails. Then you can get into more complicated ideas, like what kind of information can be conveyed by the fact of the coin’s flip, or its year of issue, or what kind of person would bother telling anybody else about their coin hobby.

In an extremely inexact, but still kinda accurate nutshell: the information in a sentence can be measured by how much you’re surprised by it. The exact definition used by information theorists can be found in Claude Shannon’s original paper (if you know what a logarithm is, you can read the paper). Even more readable is “Cover and Thomas”.
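To put a number on “surprise”: the standard definition says an outcome with probability p carries log2(1/p) bits, and the entropy of a source is the average of that over all of its outcomes. Here is a minimal sketch of that definition. The function names `surprisal_bits` and `entropy_bits` are made up for this illustration and don’t come from any particular library.

```python
import math

def surprisal_bits(p: float) -> float:
    """Self-information, in bits, of an outcome that has probability p."""
    if not 0.0 < p <= 1.0:
        raise ValueError("p must be in (0, 1]")
    return math.log2(1 / p)

def entropy_bits(probs) -> float:
    """Shannon entropy: the average surprisal over a distribution, in bits."""
    return sum(p * surprisal_bits(p) for p in probs if p > 0)

# A fair coin: each outcome is equally surprising, one bit apiece.
print(surprisal_bits(0.5))        # 1.0
print(entropy_bits([0.5, 0.5]))   # 1.0

# A heavily biased coin is less surprising on average, so it carries less.
print(entropy_bits([0.9, 0.1]))
```

The less likely you thought something was, the more bits you get when it happens. Something you were already certain of (p = 1) gives you zero.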

Unfortunately, there is no completely objective way to quantify exactly how much information a sentence contains. For example, each sentence in this post has substantially lower entropy (information) for an English language speaker than for a Russian language _____, since after each ____ there are relatively few other words that can reasonably follow, if you adhere to the grammatical rules and spelling __ English (this would be a _____ example if I could write ____). For the Russian speaker every next word or letter is far less predictable.

If, a thousand years ago, somebody had said “To be or…” the next words would be pretty surprising. Everyone listening would be expecting something like “…three bee or something like that, I mean I started running the second I saw the hive”, and would be surprised by the actual end of the sentence. However, today people know the whole quote (“…not to be.”), so you could say that the sentence contains less information, because most people can predict more of the words in it.

The difficulty comes about because information is defined and couched in the mathematics of probability, and how much new information a signal brings you is based on conditional probability (which is what you’re using when you say something like “the chance of blah given blerg”). So the amount of information you get from something is literally conditioned on what you already know. So for example, if you send someone the same message twice, they won’t get twice the information.
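The coin toss from the original question makes a handy worked example of this conditioning. The sketch below is only an illustration: the 1-in-64 prior that a coin toss is happening at all is an invented number, and the repeated message is assumed to arrive with certainty once you’ve heard it the first time.

```python
import math

def surprisal_bits(p: float) -> float:
    """Information, in bits, gained from an outcome of probability p."""
    return math.log2(1 / p)

# Hypothetical numbers, chosen only to make the arithmetic clean.
P_TOSS = 1 / 64             # listener's prior that a coin is being tossed at all
P_HEADS_GIVEN_TOSS = 1 / 2  # fair coin

# Listener A already knows about the toss, so only the outcome is news.
informed = surprisal_bits(P_HEADS_GIVEN_TOSS)

# Listener B learns both "a coin was tossed" and "it came up heads".
uninformed = surprisal_bits(P_TOSS * P_HEADS_GIVEN_TOSS)

# Hearing the same message again: given the first copy, the second is certain.
repeat = surprisal_bits(1.0)

print(informed, uninformed, repeat)  # 1.0 7.0 0.0

# Chain rule: B's total is "there was a toss" plus "heads, given the toss".
print(surprisal_bits(P_TOSS) + informed == uninformed)  # True
```

Same sentence, different listeners, different number of bits. The extra six bits that listener B gets are exactly the information in “a coin was tossed”.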

Real problems crop up when you don’t know what information the other person already has. Not speaking the same language is a common example of this. But if you have absolutely no “conditions” (things like: a coin is being flipped, written language exists, whatever), then there’s no way to send, or really even define, information.

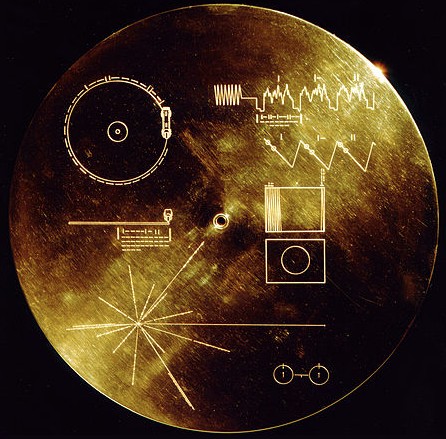

This brings up buckets of questions about how babies learn anything. You could also argue that this is why the cover of the Voyager Golden Record ended up making no damn sense.

In case you don’t have the proper previous (and subjective) information to immediately understand what this plate is trying to say, here’s the cheat sheet.

Even so, you can still gauge how much information is being conveyed with pretty minimal conditioning, like “all the information here is contained in this string of symbols”. In fact, you can determine if a string of unknown symbols is a language, or even what language (given a large enough sample)!
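As a rough sketch of what “gauging information from nothing but the symbols” can look like, you can estimate entropy straight from symbol counts: once treating every symbol on its own, and once given the symbol just before it. This is a generic frequency-counting estimate, not necessarily the method used in any particular study, and the function names are invented for the illustration.

```python
import math
from collections import Counter

def unigram_entropy(symbols) -> float:
    """Bits per symbol, estimated from how often each symbol appears."""
    counts = Counter(symbols)
    n = len(symbols)
    return sum((c / n) * math.log2(n / c) for c in counts.values())

def conditional_entropy(symbols) -> float:
    """Bits per symbol given the previous symbol, estimated from adjacent pairs."""
    pairs = Counter(zip(symbols, symbols[1:]))
    firsts = Counter(symbols[:-1])
    total = len(symbols) - 1
    return sum((c / total) * math.log2(firsts[a] / c)
               for (a, _b), c in pairs.items())

text = "abababab"
print(unigram_entropy(text))      # 1.0  (two equally common symbols)
print(conditional_entropy(text))  # 0.0  (the next symbol is always predictable)
```

A string with rigid structure scores near zero on the second measure, and a random jumble scores about as high as on the first. With a large enough sample, where a string falls between those extremes is one clue about whether it has language-like structure.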

You messed up the last link there – it points to https://www.askamathematician.com/www.cs.washington.edu/homes/rao/ScienceIndus.pdf

Fixed.

Thanks!

Yes, the amount of information a sentence contains can be objectively stated. However, in order to do so, we must ignore subjective interpretation. For example, a sentence of ones and zeros, 8 digits long, can be any one of 256 unique combinations and, at best, can answer eight unique yes-or-no questions. It can never contain more information than that.

In reality, the only difference between the person who knew the coin toss was coming and the one who didn’t is that one person knew the value of that “virtual” bit of information beforehand. As such, they may be tempted to think that it wasn’t information at all. However, not being surprised by information doesn’t change the fact that it is still information. More importantly, the extraneous information gained from the circumstances of the coin toss or the receipt of the message is, in a sense, its own unique sentence with its own set of yes-or-no questions. Beyond that, the message itself and the circumstances of the message are independent of each other. In other words, sure, we may learn that the speaker has a British accent, but the speaker doesn’t have a British accent because the coin toss was heads, or a Russian accent because the coin toss was tails. The two sets of information are separate from each other.