Physicist: This is a question that comes up a lot when you’re first studying linear algebra. The determinant has a lot of tremendously useful properties, but it’s a weird operation. You start with a matrix, take one number from every column and multiply them together, then do that in every possible combination, and half of the time you subtract, and there doesn’t seem to be any rhyme or reason why. This particular math post will be a little math heavy.

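If you have a matrix, $M = \begin{pmatrix} a_{11} & a_{12} & \cdots & a_{1n} \\ a_{21} & a_{22} & \cdots & a_{2n} \\ \vdots & \vdots & \ddots & \vdots \\ a_{n1} & a_{n2} & \cdots & a_{nn} \end{pmatrix}$, then the determinant is

$$\det(M) = \sum_{\vec{p}} \sigma(\vec{p})\, a_{p_1 1}\, a_{p_2 2} \cdots a_{p_n n},$$

where $\vec{p} = (p_1, p_2, \ldots, p_n)$ is a rearrangement of the numbers 1 through n, and $\sigma(\vec{p})$ is the “signature” or “parity” of that arrangement. The signature is $(-1)^k$, where k is the number of times that pairs of numbers in $\vec{p}$ have to be switched to get to $(1, 2, \ldots, n)$.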

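For example, if $M = \begin{pmatrix} a_{11} & a_{12} & a_{13} \\ a_{21} & a_{22} & a_{23} \\ a_{31} & a_{32} & a_{33} \end{pmatrix}$, then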

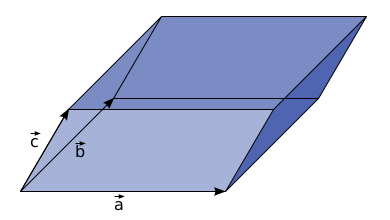
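The sum over rearrangements can be sketched in a few lines of Python. This is only an illustration, not an efficient way to compute determinants: the function names `signature` and `leibniz_det` are made up for this sketch, the indices run from 0 instead of 1, and it assumes the convention that `p[col]` picks which row is used in column `col`. The example matrix is arbitrary.

```python
from itertools import permutations

def signature(p):
    """Return (-1)**k, where k counts the pair swaps used to sort p."""
    p = list(p)
    swaps = 0
    for i in range(len(p)):
        while p[i] != i:
            j = p[i]
            p[i], p[j] = p[j], p[i]  # move the value j into slot j
            swaps += 1
    return -1 if swaps % 2 else 1

def leibniz_det(M):
    """Sum over every rearrangement p of signature(p) times one entry per column."""
    n = len(M)
    total = 0
    for p in permutations(range(n)):
        term = signature(p)
        for col in range(n):
            term *= M[p[col]][col]  # row p[col] of column col
        total += term
    return total

M = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
print(leibniz_det(M))  # 18
```

There are n! rearrangements, so this gets slow very quickly as n grows; it is here only to make the formula concrete.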

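Turns out (and this is the answer to the question) that the determinant of a matrix can be thought of as the volume of the parallelepiped created by the vectors that are columns of that matrix. In the last example, these vectors are $\vec{v}_1 = \begin{pmatrix} a_{11} \\ a_{21} \\ a_{31} \end{pmatrix}$, $\vec{v}_2 = \begin{pmatrix} a_{12} \\ a_{22} \\ a_{32} \end{pmatrix}$, and $\vec{v}_3 = \begin{pmatrix} a_{13} \\ a_{23} \\ a_{33} \end{pmatrix}$.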

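Say the volume of the parallelepiped created by $\vec{v}_1, \ldots, \vec{v}_n$ is given by $D(\vec{v}_1, \ldots, \vec{v}_n)$. Here come some properties:

1) $D(\vec{v}_1, \ldots, \vec{v}_n) = 0$, if any pair of the vectors are the same, because that corresponds to the parallelepiped being flat.

2) $D(a\vec{v}_1, \ldots, \vec{v}_n) = a\,D(\vec{v}_1, \ldots, \vec{v}_n)$, which is just a fancy math way of saying that doubling the length of any of the sides doubles the volume. This also means that the determinant is linear (in each column).

3) $D(\vec{v}_1 + \vec{w}, \ldots, \vec{v}_n) = D(\vec{v}_1, \ldots, \vec{v}_n) + D(\vec{w}, \ldots, \vec{v}_n)$, which means “linear”. This works the same for all of the vectors in $D$.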

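Check this out! By using these properties we can see that switching two vectors in the determinant swaps the sign. Putting $\vec{v}_1 + \vec{v}_2$ into both of the first two slots gives zero by property 1, and expanding each slot with property 3 gives

$$\begin{aligned} 0 &= D(\vec{v}_1 + \vec{v}_2, \vec{v}_1 + \vec{v}_2, \ldots, \vec{v}_n) \\ &= D(\vec{v}_1, \vec{v}_1, \ldots) + D(\vec{v}_1, \vec{v}_2, \ldots) + D(\vec{v}_2, \vec{v}_1, \ldots) + D(\vec{v}_2, \vec{v}_2, \ldots) \\ &= D(\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n) + D(\vec{v}_2, \vec{v}_1, \ldots, \vec{v}_n) \end{aligned}$$

4) $D(\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n) = -D(\vec{v}_2, \vec{v}_1, \ldots, \vec{v}_n)$, so switching two of the vectors flips the sign. This is true for any pair of vectors in D. Another way to think about this property is to say that when you exchange two directions you turn the parallelepiped inside-out.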

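Finally, if $\vec{e}_1 = \begin{pmatrix} 1 \\ 0 \\ \vdots \\ 0 \end{pmatrix}$, $\vec{e}_2 = \begin{pmatrix} 0 \\ 1 \\ \vdots \\ 0 \end{pmatrix}$, …, $\vec{e}_n = \begin{pmatrix} 0 \\ 0 \\ \vdots \\ 1 \end{pmatrix}$, then

5) $D(\vec{e}_1, \vec{e}_2, \ldots, \vec{e}_n) = 1$, because a 1 by 1 by 1 by … box has a volume of 1.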
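As a quick sanity check (not a proof of anything), here is a small Python sketch that tests the five properties on one arbitrary set of integer vectors. It assumes D is computed with the permutation sum from the start of the post; the helper names `D`, `add` and `scale` and the particular vectors are invented for the example.

```python
from itertools import permutations

def D(*cols):
    """Signed volume spanned by the given column vectors (permutation sum)."""
    n = len(cols)
    total = 0
    for p in permutations(range(n)):
        # parity of p, found by counting out-of-order pairs
        inversions = sum(1 for i in range(n) for j in range(i + 1, n) if p[i] > p[j])
        term = -1 if inversions % 2 else 1
        for j in range(n):
            term *= cols[j][p[j]]  # entry p[j] of column j
        total += term
    return total

def add(a, b):
    return tuple(x + y for x, y in zip(a, b))

def scale(c, a):
    return tuple(c * x for x in a)

v1, v2, v3, w = (2, 1, 1), (0, 3, 1), (1, 2, 4), (5, -1, 2)

print(D(v1, v1, v3) == 0)                                     # property 1
print(D(scale(7, v1), v2, v3) == 7 * D(v1, v2, v3))           # property 2
print(D(add(v1, w), v2, v3) == D(v1, v2, v3) + D(w, v2, v3))  # property 3
print(D(v1, v2, v3) == -D(v2, v1, v3))                        # property 4
print(D((1, 0, 0), (0, 1, 0), (0, 0, 1)) == 1)                # property 5
```

Every line prints `True` for these vectors. Integer entries are used so the comparisons are exact.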

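Also notice that, for example, $\vec{v}_1 = \begin{pmatrix} a_{11} \\ a_{21} \\ \vdots \\ a_{n1} \end{pmatrix} = a_{11}\vec{e}_1 + a_{21}\vec{e}_2 + \cdots + a_{n1}\vec{e}_n = \sum_{j=1}^{n} a_{j1}\vec{e}_j$.

Finally, with all of that math in place,

$$D(\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n) = D\!\left(\sum_{j=1}^{n} a_{j1}\vec{e}_j, \vec{v}_2, \ldots, \vec{v}_n\right) = \sum_{j=1}^{n} a_{j1}\, D(\vec{e}_j, \vec{v}_2, \ldots, \vec{v}_n)$$

Doing the same thing to the second part of D,

$$D(\vec{v}_1, \vec{v}_2, \ldots, \vec{v}_n) = \sum_{j=1}^{n} \sum_{k=1}^{n} a_{j1}\, a_{k2}\, D(\vec{e}_j, \vec{e}_k, \vec{v}_3, \ldots, \vec{v}_n)$$

The same thing can be done to all of the vectors in D. But rather than writing n different summations we can write, $D(\vec{v}_1, \ldots, \vec{v}_n) = \sum_{\vec{p}} a_{p_1 1}\, a_{p_2 2} \cdots a_{p_n n}\, D(\vec{e}_{p_1}, \vec{e}_{p_2}, \ldots, \vec{e}_{p_n})$, where every term in $\vec{p} = (p_1, p_2, \ldots, p_n)$ runs from 1 to n.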

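When any two of the $\vec{e}_{p_j}$ that are left in D are the same, then D=0 (that's property 1). This means that the only non-zero terms left in the summation are rearrangements, where the elements of $\vec{p}$ are each a number from 1 to n, with no repeats.

All but one of the $D(\vec{e}_{p_1}, \ldots, \vec{e}_{p_n})$ will be in a weird order. Switching the order in D can flip sign, and this sign is given by the signature, $\sigma(\vec{p})$. So, $D(\vec{e}_{p_1}, \ldots, \vec{e}_{p_n}) = \sigma(\vec{p})\, D(\vec{e}_1, \ldots, \vec{e}_n)$, where $\sigma(\vec{p}) = (-1)^k$, where k is the number of times that the e’s have to be switched to get to $D(\vec{e}_1, \ldots, \vec{e}_n)$.

So,

$$\begin{aligned} D(\vec{v}_1, \ldots, \vec{v}_n) &= \sum_{\vec{p}} a_{p_1 1}\, a_{p_2 2} \cdots a_{p_n n}\, \sigma(\vec{p})\, D(\vec{e}_1, \ldots, \vec{e}_n) \\ &= \sum_{\vec{p}} \sigma(\vec{p})\, a_{p_1 1}\, a_{p_2 2} \cdots a_{p_n n} \end{aligned}$$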

Which is exactly the definition of the determinant! The other uses for the determinant, from finding eigenvectors and eigenvalues, to determining if a set of vectors are linearly independent or not, to handling the coordinates in complicated integrals, all come from defining the determinant as the volume of the parallelepiped created from the columns of the matrix. It’s just not always exactly obvious how.
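The steps of that argument can be traced in code. The sketch below (with made-up names `D_basis` and `det_by_expansion`, and indices starting at 0) expands over every index tuple where each entry runs over all n values, throws away the tuples with repeats, and sorts the rest with swaps while flipping the sign each time. It is meant to mirror the derivation, assuming the same column convention, rather than to be a sensible algorithm.

```python
from itertools import product

def D_basis(p):
    """D(e_p1, ..., e_pn): 0 if an index repeats, otherwise the signature of p."""
    if len(set(p)) < len(p):
        return 0                     # property 1: two equal vectors, flat box
    p, sign = list(p), 1
    for i in range(len(p)):
        while p[i] != i:
            j = p[i]
            p[i], p[j] = p[j], p[i]  # property 4: each swap flips the sign
            sign = -sign
    return sign                      # property 5: D(e_1, ..., e_n) = 1

def det_by_expansion(M):
    """Return (determinant, number of non-zero terms) from the full expansion."""
    n = len(M)
    total, nonzero_terms = 0, 0
    for p in product(range(n), repeat=n):  # all n**n index tuples
        d = D_basis(p)
        if d == 0:
            continue
        nonzero_terms += 1
        coeff = 1
        for j in range(n):
            coeff *= M[p[j]][j]            # a_{p_j j}, from properties 2 and 3
        total += coeff * d
    return total, nonzero_terms

M = [[2, 0, 1],
     [1, 3, 2],
     [1, 1, 4]]
print(det_by_expansion(M))  # (18, 6)
```

For a 3 by 3 matrix there are 27 index tuples, and only the 6 rearrangements survive.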

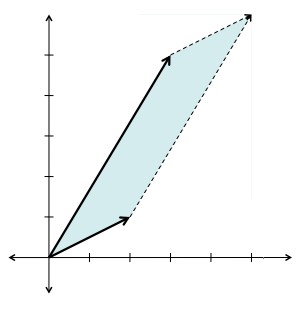

For example: The determinant of the matrix is the same as the area of this parallelogram, by definition.

Using the tricks defined in the post:

Or, using the usual determinant-finding-technique, .

Many thanks for the posting, you certainly do a great job explaining key mathematical concepts to people! And in a relaxed round-about way!

What does swapping two of the vectors correspond to in the geometric interpretation? If I put all the vectors with their tails at the origin, the order in which I put them there shouldn’t make any difference in the volume of the parallelepiped formed. It wouldn’t turn it inside-out.

The idea of a “negative volume” is important for linearity to make sense. That is, if $D(a\vec{v}_1, \ldots, \vec{v}_n) = a\,D(\vec{v}_1, \ldots, \vec{v}_n)$, and $a$ can be negative, then the volume must have the option of being negative (being “inside out”).

Swapping vectors is the same as a reflection over the plane between them. For example, switching the x and y coordinates is exactly the same as reflecting over the line/plane x=y.

The positiveness/negativeness is described by parity, which (weirdly) does depend on the order of the vectors.
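In two dimensions this is easy to try out. The sketch below uses the 2 by 2 determinant as a signed area; the function names and the two vectors are just assumptions for the example. Listing the same two sides in the other order, or reflecting both of them over the line x=y, flips the sign while the size of the area stays put.

```python
def signed_area(u, v):
    """Signed area of the parallelogram with sides u and v (2x2 determinant)."""
    return u[0] * v[1] - u[1] * v[0]

def reflect_xy(u):
    """Reflect a vector over the line x = y by swapping its coordinates."""
    return (u[1], u[0])

u, v = (3, 1), (1, 2)
print(signed_area(u, v))                          # 5
print(signed_area(v, u))                          # -5
print(signed_area(reflect_xy(u), reflect_xy(v)))  # -5
```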

Sorry that my question has nothing to do with algebra, but the following link is quite interesting to me. I wonder if it is theory or fantasy? Perhaps you might give me your opinion and thoughts on this 3 part hypothetical? Thanks

What is the mathematician up to these days? I haven’t read anything by him in quite a while.

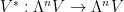

I think it’s worth pointing out that there’s a nice, clean, coordinate-free way to understand the determinant. If $V$ is an $n$-dimensional real vector space, the top exterior power $\Lambda^n V$ is a one-dimensional real vector space. A self-map $T : V \to V$ induces a map $\Lambda^n T : \Lambda^n V \to \Lambda^n V$. Since $\Lambda^n V$ is a one-dimensional real vector space, $\Lambda^n T$ is multiplication by some real number. That number is (you guessed it) the determinant of $T$.


A good reference for understanding the patterns of the determinant comes from geometric algebra (see Linear and Geometric Algebra by Macdonald). In essence, the determinant is the outer product of all of the vectors of the matrix.

In the first figure, is the a[n,1] intended to appear in M twice? It appears to be a copy paste error.

It is definitely not supposed to appear twice. Fixed!


It was a nice explanation. But you mostly explained based on a 3X3 matrix! I have a few questions.

1) What is the physical significance of 4×4 and higher matrices? Are there any uses or physical examples for 4×4 and higher order matrices?

2) Is there any physical significance for “non-square” matrices?

3) What is the physical meaning of the “rank of a matrix”?

Great article, thanks! I truly appreciate that you explain where the idea could come from.

I really liked your way of thinking about negative volume (when one has additionally heard about basis orientation, and that ||two bases give the same sign of determinant if and only if there exists a continuous family of bases containing these two bases||, it really lets you grasp the intuition).

It took me a while to feel the linearity of volume (easy to see in 2D, not so obvious in 3D); a picture for that would make your work even better.


Thanks a lot! Really helped me understand the determinant 🙂

It’s really an excellent explanation! Thank you so much!

But I still have a question. How is property 3) true? I mean I know it’s linear, but in the sense of “volume”, it’s not so obvious and it can be confusing.

@ Ed:

The easiest way to see property 3 is probably to move the vector combination in question from the first column to the last column. Also in the interest of expediency I will only consider the absolute value of the determinant (e.g. a determinant of +7 and -7 are the same for my purposes here).

Like most things involving matrices, orthogonality allows you to quickly cut to the issue.

So, first QR factorize A. So $A = QR$. Note when multiplying two matrices, say A and B, det(AB) = det(A)det(B).

So if we multiply by the transpose of Q, then $Q^T A = Q^T Q R = R$. Note that Q and its transpose are orthonormal, and so they have a determinant of 1 in absolute value.

The eigenvalues of R will be quite different from A, but $|\det(A)| = |\det(R)|$. And R is triangular so each of its diagonal elements is an eigenvalue. The determinant is, then, the product of those diagonal elements. Further, if before factorizing you had split out that final column vector into a separate w component and a separate v, the question would be: is v linearly independent from the rest of the columns in the matrix it is in? If it is, then we can see its contribution to the determinant via the bottom right corner entry in R. Similar question with w. Note that if either or both of v and w are not linearly independent of the other columns, then the associated value in the bottom right cell of ‘their R’ is 0, and hence the associated determinant is zero.

Conclusion: if the product of all the eigenvalues but the last one in the bottom right is P, then v’s contribution * P + w’s contribution * P = (v’s contribution + w’s contribution) * P. That is the linearity mentioned in property number 3.
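Here is a rough numerical sketch of that argument for a 3 by 3 case. It uses classical Gram-Schmidt as a stand-in for a library QR routine and assumes the columns are linearly independent; the names `orthonormalize`, `det3`, `P` and `q3`, and the vectors themselves, are invented for the example. To keep the signs consistent, the "contribution" of the last column is taken to be its signed component along one fixed unit vector `q3` perpendicular to the first two columns.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def orthonormalize(cols):
    """Classical Gram-Schmidt: return (columns of Q, diagonal of R)."""
    qs, diag = [], []
    for v in cols:
        r = list(v)
        for q in qs:
            c = dot(q, v)
            r = [ri - c * qi for ri, qi in zip(r, q)]  # remove the part along q
        length = math.sqrt(dot(r, r))
        diag.append(length)
        qs.append([ri / length for ri in r])  # assumes independent columns
    return qs, diag

def det3(a, b, c):
    """Determinant of the 3x3 matrix with columns a, b, c."""
    return (a[0] * (b[1] * c[2] - b[2] * c[1])
            - b[0] * (a[1] * c[2] - a[2] * c[1])
            + c[0] * (a[1] * b[2] - a[2] * b[1]))

a, b = (2.0, 1.0, 1.0), (0.0, 3.0, 1.0)
v, w = (1.0, 2.0, 4.0), (5.0, -1.0, 2.0)
vw = tuple(x + y for x, y in zip(v, w))

qs, diag = orthonormalize([a, b, v])
P = diag[0] * diag[1]  # product of the diagonal of R, except the last entry
q3 = qs[2]             # unit vector perpendicular to a and b

# |det(A)| is the product of the diagonal of R
print(math.isclose(abs(det3(a, b, v)), P * diag[2]))
# the last column only enters through its component along q3, which is linear
print(math.isclose(P * dot(q3, v) + P * dot(q3, w), P * dot(q3, vw)))
print(math.isclose(abs(det3(a, b, vw)), abs(P * dot(q3, vw))))
```

All three checks print `True` for these vectors. The absolute values alone would not add up in general (the two components can have opposite signs), which is why the sketch keeps the signed component along `q3`.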

What is the meaning of the rank of a matrix?