The Original Question Was: I have machine … and when I press a button, it shows me one object that it selects randomly. There are enough objects that simply pressing the button until I no longer see new objects is not feasible. Pressing the button a specific number of times, I take a note of each object I’m shown and how many times I’ve seen it. Most of the objects I’ve seen, I’ve seen once, but some I’ve seen several times. With this data, can I make a good guess about the size of the set of objects?

Physicist: It turns out that even if you really stare at how often each object shows up, your estimate for the size of the set never gets much better than a rough guess. It’s like describing where a cloud is; any exact number is silly. “Yonder” is about as accurate as you can expect. That said, there are some cute back-of-the-envelope rules for estimating the sizes of sets witnessed one piece at a time, that can’t be improved upon too much with extra analysis. The name of the game is “have I seen this before?”.

The situation in question.

Zero repeats

It wouldn’t seem like seeing no repeats would give you information, but it does (a little).

How many times do you have to randomly look at cards before they start to look familiar?

The probability of seeing no repeats after randomly drawing K objects out of a set of N total objects is . This equation isn’t exact, but (for N bigger than ten or so) it’s way too close to matter.

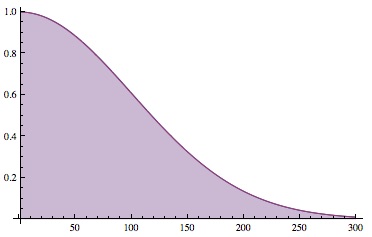

The probability of seeing no repeats after K draws from a set of N=10,000 objects.

The probability is one for K=0 (if you haven’t looked at any objects, you won’t see any repeats), it drops to about 50% for and about 10% for

. This gives us a decent rule of thumb: in practice, if you’re drawing objects at random and you haven’t seen any repeats in the first K draws, then there are likely to be at least

objects in the set. Or, to be slightly more precise, if there are N objects, then there’s only about a 50% chance of randomly drawing

times without repeats.

Seeing only a handful of repeats allows you to very, very roughly estimate the size of the set (about the square of the number of times you’d drawn when you saw your first repeats, give or take a lot), but getting anywhere close to a good estimate requires seeing an appreciable fraction of the whole.

Some repeats

So, say you’ve seen an appreciable fraction of the whole. This is arguably the simplest scenario. If you’re making your way through a really big set and 60% (for example) of the time you see repeats, then you’ve seen about 60% of the things in the set. That sounds circular, but it’s not quite.

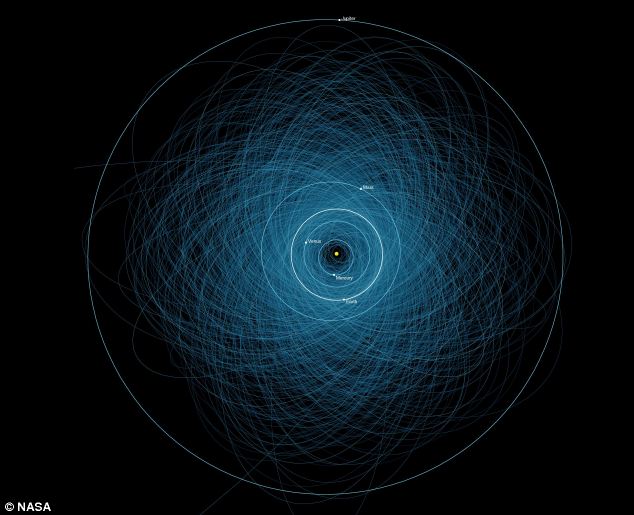

The orbits of 14,000 worrisome objects.

For example, we’re in a paranoia-fueled rush to catalog all of the dangerous space rocks that might hit the Earth. We’ve managed to find at least 90% of the Near Earth Objects that are over a km across and we can make that claim because whenever someone discovers a new one, it’s already old news at least 90% of the time. If you decide to join the effort (which is a thing you can do), then be sure to find at least ten or you probably won’t get to put your name on a new one.

All repeats

There’s no line in the sand where you can suddenly be sure that you’ve seen everything in the set. You’ll find new things less and less often, but it’s impossible to definitively say when you’ve seen the last new thing.

When should you stop looking for something new at the bottom?

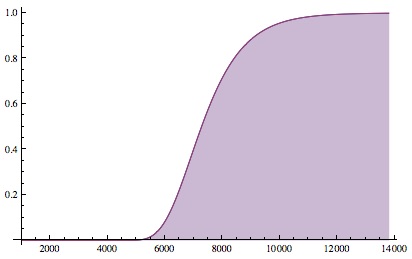

I turns out that the probability of having seen all N objects in a set after K draws is approximately , which is both admittedly weird looking and remarkably accurate. This can be solved for K.

When P is close to zero K is small and when P is close to one K is large. The question is: how big is K when the probability changes? Well, for reasonable values of P (e.g., 0.1<P<0.9) it turns out that is between -1 and 1. You’re likely to finally see every object at least once somewhere in

. You’ll already know approximately how many objects there are (N), because you’ve already seen (almost) all of them.

The probability of seeing every one of N=1000 objects at least once after K draws. This ramps up around Nln(N)≈6,900.

So, if you’ve seen N objects and you’ve drawn appreciably more than times, then you’ve probably seen everything. Or in slightly more back-of-the-envelope-useful terms: when you’ve drawn more than “K = 2N times the number of digits in K” times.

Answer Gravy: Those approximations are a beautiful triumph of asymptotics. First:the probability of seeing every object.

When you draw from a set over-and-over you generate a sequence. For example, if your set is the alphabet (where N=26), then a typical sequence might be something like “XKXULFQLVDTZAC…”

If you want only the sequences the include every letter at least once, then you start with every sequence (of which there are ) and subtract all of the sequences that are missing one of the letters. The number of sequences missing a particular letter is

and there are N letters, so the total number of sequences missing at least one letter is

. But if you remove all the sequences without an A and all the sequences without a B, then you’ve twice removed all the sequences missing both A’s and B’s. So, those need to be added back. There are

sequences missing any particular 2 letters and there are “N choose 2” ways to be lacking 2 of the N letters. We need to add

back. But the same problem keeps cropping up with sequences lacking three or more letters. Luckily, this is not a new problem, so the solution isn’t new either.

By the inclusion-exclusion principle, the solution is to just keep flipping sign and ratcheting up the number of missing letters. The number of sequences of K draws that include every letter at least once is which is the total number of sequences, minus the number that are missing one letter, plus the number missing two, etc. A more compact way of writing this is

. The probability of seeing every letter at least once is just this over the total number of possible sequences,

, which is

The two approximations are asymptotic and both of the form . They’re asymptotic in the sense that they are perfect as n goes to infinity, but they’re also remarkably good for values of n as small as ten-ish. This approximation is actually how the number e is defined.

This form is simple enough that we can actually do some algebra and see where the action is.

Now: the probability of seeing no repeats.

The probability of seeing no repeats on the first draw is , in the first two it’s

, in the first three it’s

, and after K draws the probability is

The approximations here are , which is good for small values of x, and

, which is good for large values of K. If K is bigger than ten or so and N is a hell of a lot bigger than that, then this approximation is remarkably good.

This is how biologist estimate populations of things like fish. Capture and tag and release and see how often you capture the same fish twice.

There might be biases, e.g., one biting twice shy.

The “German tank problem” is an interesting twist on this kind of problem.

See: https://en.wikipedia.org/wiki/German_tank_problem

Congratulations! You have been nominated for the Versatile Blogger Award by Coffee & Quasars because they thought your blog was awesome.

Please follow this link to find out more about the award: https://coffeeandquasars.wordpress.com/2016/12/26/versatile-blogger-award/

Anne Chao (1984) came up with a formula that ecologists now use to estimate population size (or species diversity). Chao’s estimator Nc stipulates that N includes some (S0) individuals (or species) that have never been sighted, some (S1) that have been seen just once, some (S2) seen just twice, and maybe some (Sn)with n>2 sightings.

Chao’s formula uses just S1 and S2 to estimate S0 (the number of species NEVER sighted) as

S0 ≈ S1^2 / 2 S2.

That is,

N ≈ Nc = S1^2 / 2S2 + S1 + S2 + …

where Sn = # species observed n times.

The crux of the problem is we don’t know S0, which Chao estimated by the first term in that sum. More compactly, if S is the total number of species ever sighted,

N ≈ Nc = S + (S1^2) / (2*S2).

How precise is the (2-count) Chao Estimator? Its variance (the square of the standard deviation) is:

var(Nc(S1,S2))=

S2*[(r/4)^4 + r^3 + (r/2)^2]

where

r=(S1/S2)

–Generalized S0–

If Sm species are seen m times (and none are seen more): Number of species (including those seen zero times, S0) is

S0+S1+S2+… +Sm, where

S0 ~ D2 = S1^2 / 2 S2

which Chao (2004) generalized, for n in [2,m], to S0 ~

Dn = (S1^n / n! Sn) ^ (1/(n-1)).

ex. 1,0,8;9 -> N~9+(8^3/6)^0.5

=18.2 (best est. when S2=0).

But the numbers seen just once and twice (if any) give the most robust estimate for the number not yet seen:

S0 ≈ S1^2 / 2 S2.

Eample: I’ve plotted successive estimates of the number of active online Scrabble players over the past couple of years. At present, (N2,N1,N) = (28, 485, 519) ——>

Nchao = 4719 ± 778 active players (95% confidence interval).